Data Visualisation Coursework Assignment Sample

Report

You are asked to carry out an analysis of a dataset and to present your findings in the form of a maximum of two (2) visualisations (or a single (1) dashboard comprising a set of linked sub-visualisations), along with an evaluation of your work.

You should find one or more freely available dataset(s) on any topic, (with a small number of restrictions, see below) from a reliable source. You should analyse this data to determine what the data tells you about its particular topic and should visualise this data in a way that allows your chosen audience to understand the data and what the data shows. You should create a maximum of two (2) visualisations of this data that efficiently and effectively convey the key message from your chosen data.

It should be clear from these visualisations what the message from your data is. You can use any language or tool you like to carry out both the analysis and the visualisation, with a few conditions/restrictions, as detailed below. All code used must be submitted as part of the coursework, along with the data required, and you must include enough instructions/information to be able to run the code and reproduce the analysis/visualisations.

Dataset Selection

You are free to choose data on any topic you like, with the following exceptions. You cannot use data connected to the following topics:

1. COVID-19. I’ve seen too many dashboards of COVID-19 data that just replicate the work of either Johns Hopkins or the FT, and I’m tired of seeing bar chart races of COVID deaths, which are incredibly distasteful. Let’s not make entertainment out of a pandemic.

2. World Happiness Index. Unless you are absolutely sure that you’ve found something REALLY INTERESTING that correlates with the world happiness index, I don’t want to see another scatterplot comparing GDP with happiness. It’s been done too many times.

3. Stock Market data. It’s too dull. Treemaps of the FTSE100/Nasdaq/whatever index you like are going to be generally next to useless, candle charts are only useful if you’re a stock trader, and I don’t get a thrill from seeing the billions of dollars hoarded by corporations.

4. Anything NFT/Crypto related. It’s a garbage pyramid scheme that is destroying the planet and will likely end up hurting a bunch of people who didn’t know any better.

Solution

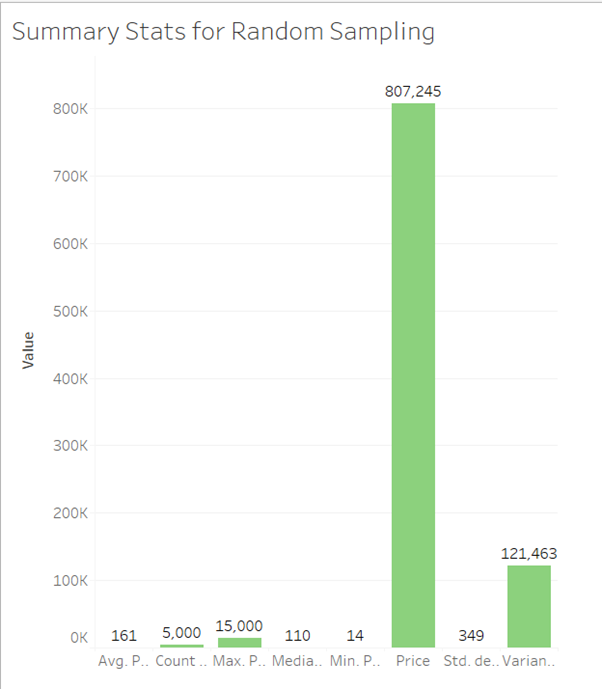

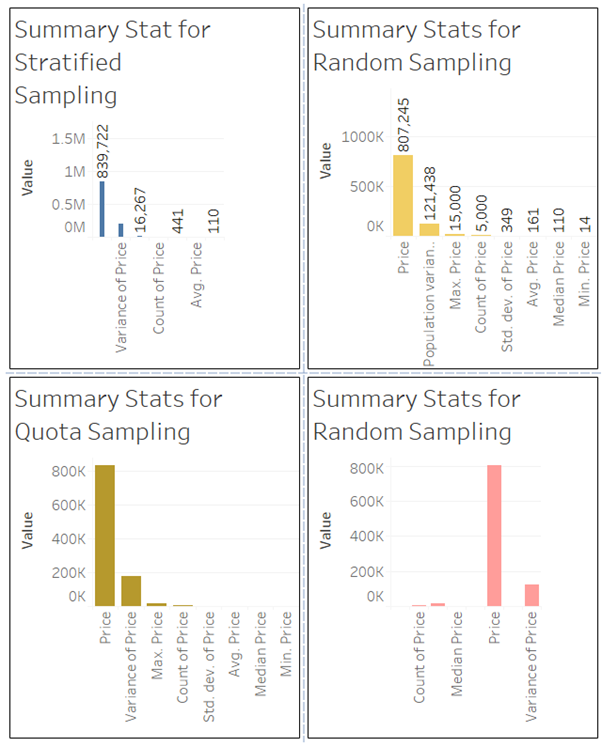

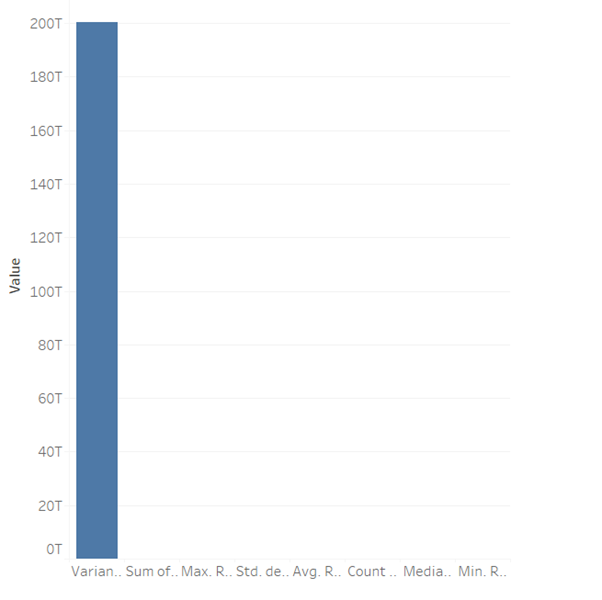

The data used for this reflective study comes from the World Development Indicators. The dataset contains information on trade, industry and income for different regions, countries and income groups. A dashboard was created in Tableau using the two datasets, named country and country codes. The form of presentation used is a bar graph (Hoekstra and Mekonnen, 2012).

1. Trade data, Industrial data and Water withdrawal data vs regions.

Figure 1: Region vs Trade data, Industrial data and Water withdrawal data.

The first visualization covers the Trading data, Industrial data and Water Withdrawal data. All three are presented together and compared across regions to give an overview of each region and its standing in these sectors. For the Trading data, the leading region is clearly Europe and Central Asia, with a maximum count of 98,600, which is nearly equal to its water withdrawal count, differing by only 311. In this region the industrial count is only 82,408, yet it is the highest in all the data taken.

The next leading region is Sub-Saharan Africa, but only for the Trade data and Water Withdrawal data, while the leading region for industrial data is the Middle East and North Africa.

Overall, these findings suggest that Europe and Central Asia offer the most significant opportunities for businesses and organizations in terms of Trading and Industrial sectors. Meanwhile, Sub-Saharan Africa and Latin America and Caribbean offer promising opportunities in the Trading sector, and the Middle East and North Africa have potential in the Industrial sector.

These findings also highlight the need for policymakers to focus on improving access to resources and infrastructure in regions where the count of these data is lower, to boost economic growth and development. The success of a visualization depends on several factors, such as the choice of visual encoding, the clarity of the labels and titles, and the overall design. Judged on these factors, this can be considered a successful visualization.

Moreover, the visualization provides a comprehensive overview of the data, allowing viewers to understand the relationships and patterns between the different sectors and regions. The comparison of Trading, Industrial and Water Withdrawal data across regions also allows viewers to quickly identify which regions are leading in each sector and which ones have potential for growth.

The analysis provided in the visualization also adds value by highlighting the implications of the data, such as the need for policymakers to focus on improving access to resources and infrastructure in regions where the count of these data is lower to boost economic growth and development. This contextual information helps viewers to understand the underlying causes and implications of the data, providing a more complete picture of the situation (Batt et al., 2020).

Furthermore, the analysis provides insights into the regions that offer the most significant opportunities for businesses and organizations in terms of trading and industrial sectors, and the regions that have potential for growth and development. This information can be valuable for policymakers and stakeholders looking to invest in or improve infrastructure and resources in these regions.

The visualizations are well designed, using different colours to represent each group, with proper labels and tags that make them easy for viewers to understand, so they can be considered a success. However, additional analysis and contextual information may also be required to understand the underlying causes and implications of the data.

2. Source of income and expenditure cost for different income groups and regions.

Figure 2: Count of source of income and expenditure cost for different income groups and regions.

This visualization covers the income groups in different regions and compares the count of sources of income with the total expenditure. It gives a clear picture of the data for every income group.

One key observation is that the lower middle-income group seems to have more balanced results compared to other income groups. However, there are still significant difficulties faced by people in South Asia, where the count of income sources is low for all income groups.

Another important observation is that Sub-Saharan Africa appears to have the highest count for the source of income overall, while Latin America and the Caribbean have the highest count for the upper middle-income group. On the other hand, the Middle East & North Africa and North America have the lowest count of income sources among the high-income group, which indicates that there is a significant disparity in income sources and expenditures across different regions. It is important to create more opportunities for income generation and improve access to education, training, and resources to enable people to improve their income and standard of living (Lambers and Orejas, 2014).

The visualization effectively communicates the findings about the disparities in income sources and expenditures across different regions and income groups. It highlights the areas where people face significant difficulties, such as South Asia, where the count of income sources is low for all income groups. The visualization likewise gives significant insights into the areas where there are opportunities for income generation, such as Sub-Saharan Africa and Latin America and the Caribbean.

Overall, this visualization succeeds in communicating complex data about income groups and their sources of income and expenditures in a clear and understandable manner. It effectively highlights the disparities between different regions and income groups and the need for policies and programmes to improve access to education, training, and resources to enable people to improve their income and standard of living.

References

MIS602 IT Report

Task Instructions

Please read and examine carefully the attached MIS602_Assessment 2_Data Implementation_ Case study and then derive the SQL queries to return the required information. Provide SQL statements and the query output for the following:

1 List the total number of customers in the customers table.

2 List all the customers who live in any part of CLAYTON. List only the Customer ID, full name, date of birth and suburb.

3 List all the staff who have resigned.

4 Which plan gives the biggest data allowance?

5 List the customers who do not own a phone.

6 List the top two selling plans.

7 What brand of phone(s) is owned by the youngest customer?

8 From which number was the oldest call (the first call) made?

9 Which tower received the highest number of connections?

10 List all the customerIDs of all customers having more than one mobile number.

Note: Only CustomerId should be displayed.

11 The company is considering changing the plan durations with 24 and 36 days to 30 days.

(a) How many customers will be affected? Show SQL to justify your answer.

(b) What SQL will be needed to update the database to reflect the upgrades?

12 List the staffId, full name and supervisor name of each staff member.

13 List all the phone numbers which have never made any calls. Show the query using:

i. Nested Query

ii. SQL Join

14 List the customer ID, customer name, phone number and the total number of hours the customer was on the phone during August of 2019 from each phone number the customer owns. Order the list from highest to the lowest number of hours.

15 i. Create a view that shows the popularity of each phone colour.

ii. Use this view in a query to determine the least popular phone colour.

16 List all the plans and total number of active phones on each plan.

17 List all the details of the oldest and youngest customer in postcode 3030.

18 Produce a list of customers sharing the same birthday.

19 Find at least 3 issues with the data and suggest ways to avoid these issues.

20 In not more than 200 words, explain at least two ways to improve this database based on what you have learned in weeks 1-8.

Solution

Introduction

A database management system (DBMS) can be defined as a program that is mainly used for defining, developing, managing and controlling access to a database. A successful information system gives precise, timely and relevant data to users so that it can be used for a better decision-making process. The decision-making process should be founded on timely and appropriate information so that decisions can be based on the business objective. The role of a DBMS in an information system is to minimize or eliminate data redundancy and to maximize data consistency (Saeed, 2017). In this assessment, the case study of a mobile phone company has been given. The phones and their plans are sold by employees to clients with some specific features. Calls are charged per minute, in cents. The plan durations start from a month. The main purpose of this assessment is to understand the requirements behind various information requests against the given database structure and to develop SQL statements for the given queries.

Database Implementation

As the database has been designed on the basis of the given ERD, it is time to implement the database design. A MySQL database has been used for the implementation (Eze & Etus, 2014). The main reason for using MySQL is that a free version is available on the internet. The database engine also works flexibly with many programming languages, and MySQL provides good security (Susanto & Meiryani, 2019).

Entity Relationship Diagram

The given ERD for this mobile phone company database is as follows:

[Figure: entity relationship diagram for the mobile phone company database]

Implementation of Database for Mobile Phone Company

Database and Table Creation

Create schema Mobile_Phone_Company;

Use Mobile_Phone_Company;

Table Structure

Staff

Customer

Mobile

Plan

Connect

Calls

Tower

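Since the table definitions above were given only as images, the following is a minimal sketch of how two of the tables might be declared. The column names are taken from the queries used later in this report; the data types, lengths and keys are assumptions for illustration and are not the definitions from the case study.

```sql
-- Sketch only: column names come from the queries in this report.
-- Data types, lengths and key choices are assumptions.
CREATE TABLE IF NOT EXISTS Plan (
    PlanName      VARCHAR(50)  NOT NULL,
    BreakFee      DECIMAL(8,2),
    DataAllowance INT,
    MonthlyFee    DECIMAL(8,2),
    PlanDuration  INT,
    CallCharge    DECIMAL(8,2),
    PRIMARY KEY (PlanName)
);

CREATE TABLE IF NOT EXISTS Mobile (
    MobileID    INT         NOT NULL,
    PhoneNumber VARCHAR(20) NOT NULL,
    CustomerID  INT         NOT NULL,
    PlanName    VARCHAR(50) NOT NULL,
    BrandName   VARCHAR(50),
    PhoneColour VARCHAR(30),
    Cancelled   DATE,          -- assumed NULL while the phone is still active
    PRIMARY KEY (MobileID),
    FOREIGN KEY (PlanName) REFERENCES Plan (PlanName)
);
```

Declaring the foreign key from Mobile to Plan lets the database itself reject a phone that refers to a plan that does not exist.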

Data Insertion

Staff

Customer

Mobile

Plan

Connect

Calls

Tower

SQL Queries

1. Total number of customers

Select Count(CustomerID) from Customer;

2. Customers in CLAYTON

Select CustomerID, Concat(Given,' ',Surname) as FullName, DOB, Suburb from customer

Where suburb like '%CLAYTON%';

3. Resigned Staff

Select * from staff where Resigned is not null ;

4. Biggest Data Allowance Plan

SELECT PlanName, BreakFee, DataAllowance, MonthlyFee,

PlanDuration, CallCharge from PLAN

WHERE DataAllowance = (SELECT Max(DataAllowance) from PLAN);

5. Customers who don’t have phone

SELECT CustomerID, CONCAT(Given,' ',Surname) AS FullName, DOB, Suburb

from Customer WHERE NOT EXISTS (Select CustomerID from Mobile

WHERE Mobile.CustomerID=Customer.CustomerID);

6. Top two selling plans

SELECT Mobile.PlanName, BreakFee, DataAllowance, MonthlyFee, PlanDuration, CallCharge,

COUNT(Mobile.PlanName)

FROM Mobile, Plan WHERE

Mobile.PlanName=Plan.PlanName

GROUP BY

Mobile.PlanName, BreakFee, DataAllowance, MonthlyFee, PlanDuration, CallCharge

ORDER BY COUNT(Mobile.PlanName) DESC LIMIT 2;

7. Brand owned by youngest customers

SELECT BrandName from mobile WHERE

CustomerID = (SELECT customerid From Customer where

Mobile.customerid=customer.customerid and

dob=(select max(dob) From Customer) );

8. The first call made by which number

SELECT mobile.phonenumber, calls.calldate

from mobile, calls where

calls.mobileid=mobile.mobileid and

calls.calldate=(select min(calldate) from calls);

9. Tower that received the highest number of connections

SELECT * from Tower WHERE

MaxConn=(Select Max(MaxConn) from Tower);
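The query above compares the MaxConn column, which may describe a tower's capacity rather than the connections it actually received. As a hedged alternative, the sketch below counts rows in the Connect table per tower. It assumes that Connect holds one row per connection and that both Connect and Tower have a TowerID column; neither assumption is confirmed by this report.

```sql
-- Sketch only: assumes Connect has one row per connection and that
-- Connect.TowerID references Tower.TowerID (hypothetical column names).
SELECT t.TowerID, COUNT(*) AS Connections
FROM Tower t
JOIN Connect c ON c.TowerID = t.TowerID
GROUP BY t.TowerID
ORDER BY Connections DESC
LIMIT 1;
```

LIMIT 1 returns a single tower even when several towers tie for the highest count, so a tie would need a subquery on the maximum count instead.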

10. Customers who have more than one mobile number.

SELECT CustomerID from mobile

Group By CustomerID HAVING Count(PhoneNumber)>1;

11. (a) Number of customers affected

SELECT Count(DISTINCT CustomerID) from Mobile, plan where

mobile.planname=plan.planname and

planduration in(24,36);

(b) Update database

Update Plan set planduration=30

where planduration in (24,36);
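Because this update changes plan data in place, it may be safer to run it inside a transaction and inspect the result before committing. The sketch below assumes the Plan table uses a transactional storage engine such as InnoDB.

```sql
-- Sketch only: assumes a transactional engine (for example InnoDB).
START TRANSACTION;

-- Inspect the plans that are about to change.
SELECT PlanName, PlanDuration
FROM Plan
WHERE PlanDuration IN (24, 36);

UPDATE Plan
SET PlanDuration = 30
WHERE PlanDuration IN (24, 36);

-- Number of rows changed by the UPDATE just executed.
SELECT ROW_COUNT() AS PlansUpdated;

-- Use ROLLBACK instead of COMMIT if the count is not what was expected.
COMMIT;
```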

12. Staff members

Select S1.StaffID, CONCAT(S1.Given,' ',S1.Surname) AS FullName,

CONCAT(S2.Given,' ',S2.Surname) AS SupervisorName From

Staff S1 LEFT JOIN Staff S2 ON

S2.staffid=s1.supervisorid;

13. Phone number which have not made any call

Nested Query

SELECT PhoneNumber from mobile

where not exists

(Select MobileID from calls where calls.mobileid=mobile.mobileid);

SQL Join

SELECT PhoneNumber from mobile Left Join

calls on calls.mobileid=mobile.mobileid

where calls.mobileid is null;

14. List the customer ID, customer name, phone number and the total number of hours the customer was on the phone during August of 2019 from each phone number the customer owns. Order the list from highest to the lowest number of hours.

-- Assumption: CallDuration is stored in seconds; divide by 60 instead if it is stored in minutes.

select mobile.customerid, CONCAT(Customer.Given,' ',Customer.Surname) AS FullName,

mobile.phonenumber, sum(calls.callduration)/3600 as NoOfHours

from calls, mobile, customer where

calls.mobileid=mobile.mobileid and

mobile.customerid=customer.customerid and

month(calls.calldate)=8 and year(calls.calldate)=2019 group by

mobile.customerid, Customer.Given, Customer.Surname,

mobile.phonenumber

Order by NoOfHours desc;

15. (i) View Creation

Create or Replace View view_color as Select PhoneColour, Count(MobileID) AS MobileNum From Mobile

Group By PhoneColour;

(ii) View Query

Select PhoneColour, MobileNum from view_color

where MobileNum = (Select Min(MobileNum) from view_color);

16. Active phone plans

-- Assumption: a phone is active while its Cancelled column is NULL.

Select plan.planname, count(mobile.mobileid) as ActivePhones from

plan left join mobile on mobile.planname=plan.planname and

mobile.cancelled is null

group by plan.planname;

17. Oldest and youngest customer

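-- Assumption: the Customer table stores the postcode in a column named Postcode.

Select * from customer where postcode = 3030 and dob =

(select max(dob) from customer where postcode = 3030)

UNION

Select * from customer where postcode = 3030 and dob =

(select min(dob) from customer where postcode = 3030);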

18. Customers with same birthdays

select c.customerid, c.given, c.surname, c.DOB from customer c where c.dob in (select dob from customer group by dob having count(*)>1) order by c.dob;
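The task can also be read as asking for customers who share the same day and month of birth, regardless of the year. A minimal sketch of that reading, using only the Customer columns that already appear in this report, could look like this:

```sql
-- Sketch only: treats "same birthday" as same day and month, ignoring the year.
SELECT c1.CustomerID, c1.Given, c1.Surname, c1.DOB
FROM Customer c1
WHERE EXISTS (
    SELECT 1
    FROM Customer c2
    WHERE c2.CustomerID <> c1.CustomerID
      AND MONTH(c2.DOB) = MONTH(c1.DOB)
      AND DAY(c2.DOB)   = DAY(c1.DOB)
)
ORDER BY MONTH(c1.DOB), DAY(c1.DOB), c1.CustomerID;
```

Ordering by month and day keeps customers with a shared birthday next to each other in the output.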

Issues with the data

The main issues with the data are as follows:

- The relationship in the staff table for defining a supervisor is complicated as it is self joined to maintain the relationship.

- The overall relationship between tower, plan, mobile and calls is very complicated.

- A clean data policy is not used for data insertion.
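Data issues of this kind can be looked for with simple checking queries. The sketch below shows three illustrative checks against the tables and columns used in this report; they are examples of the approach, not findings from the actual data.

```sql
-- Sketch only: illustrative data-quality checks, not results from the case study data.

-- 1. Phone numbers that appear more than once.
SELECT PhoneNumber, COUNT(*) AS Occurrences
FROM Mobile
GROUP BY PhoneNumber
HAVING COUNT(*) > 1;

-- 2. Customers whose date of birth lies in the future.
SELECT CustomerID, DOB
FROM Customer
WHERE DOB > CURDATE();

-- 3. Phones that refer to a plan missing from the Plan table.
SELECT m.MobileID, m.PlanName
FROM Mobile m
LEFT JOIN Plan p ON p.PlanName = m.PlanName
WHERE p.PlanName IS NULL;
```

Any rows returned would point to records that need cleaning; constraints such as UNIQUE and FOREIGN KEY would stop the same problems from being inserted again.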

Ways to improve the database

Although the third normal form has been used to define the database structure, some steps are still possible to improve this database. It is difficult to maintain the self-join relationship used in the Staff table within a single table. Hence, a separate table could be used to hold the list of supervisors. On the other hand, a mobile has a plan and makes calls, and the calls are made through the towers listed in the Tower table.

Secondly, in order to secure this database, authorized data access is required. This implies that only as much data as is required should be retrievable. Full data access privileges should be given only to the administrator or a top management official who actually requires all data reports in order to make better decisions.
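As a sketch of how such restricted access might be set up in MySQL, a view can expose only the required columns and privileges can then be granted per user. The view name, user names and passwords below are hypothetical and chosen only for illustration.

```sql
-- Sketch only: view name, user names and passwords are hypothetical.

-- Expose only the customer columns that sales staff need.
CREATE OR REPLACE VIEW view_customer_contact AS
SELECT CustomerID, Given, Surname, Suburb
FROM Customer;

-- Sales staff may read the restricted view but not the base tables.
CREATE USER IF NOT EXISTS 'sales_staff'@'localhost' IDENTIFIED BY 'change_me';
GRANT SELECT ON Mobile_Phone_Company.view_customer_contact
    TO 'sales_staff'@'localhost';

-- The administrator receives full privileges on the schema.
CREATE USER IF NOT EXISTS 'db_admin'@'localhost' IDENTIFIED BY 'change_me_too';
GRANT ALL PRIVILEGES ON Mobile_Phone_Company.*
    TO 'db_admin'@'localhost';
```

Granting access through a view rather than the base table means the date of birth and other sensitive columns stay hidden from users who do not need them.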

References

MIS500 Foundation of Information System Assignment Sample

Introduction to Chanel

Chanel is a French luxury fashion house that was founded by Coco Chanel in 1910. It focuses on women's high fashion and ready-to-wear clothes, luxury goods, perfumes and accessories. The company is currently privately owned by the Wertheimer family. This is one of the last family businesses in the world of fashion and luxury, with revenues of €12.8 billion (2019) and net income of €2.14 billion (2019).

Chanel – Case Study

To complete this assessment task you are required to design an information system for Chanel to assist with their business. You have discussed Porter’s Value Chain in class and you should understand the primary and support activities within businesses. For this assessment you need to concentrate on Marketing and Sales and how Chanel cannot survive without a Digital Strategy.

Source: Porter’s Value Chain Model (Rainer & Prince, 2019)

Read the Chanel case study which will be distributed in Class during Module 3.1. Visit these websites

https://www.chanel.com/au/

Based on the information provided as well as your own research (reading!) into information systems write a report for Chanel to assist them in developing a ‘Digital Strategy’ to develop insights for their marketing and sales especially in online sales. Please structure the group report as follows:

- Title page

- Introduction

- Background to the issue you plan to solve

- Identify and articulate the case for a Digital Strategy at Chanel (based upon the data do you as a group of consultants agree or disagree)

- Research the issues at Chanel and present a literature review – discuss the marketing and sales data analysis needs and the range of BI systems available to meet these needs.

- Recommended Solution – explain the proposed Digital Strategy and information systems and how it will assist the business. You may use visuals to represent your ideas.

- Conclusion

- References (quality and correct method of presentation. You must have a minimum of 15 references)

Solution

Introduction

Businesses are upgrading their operations by implementing digital strategies in order to compete against rivals and stay in business. In doing so, companies must continuously adjust their business strategies and procedures to keep attracting the newer generation of customers, or else face certain doom. This paper is based on Chanel, a luxury fashion brand based in Neuilly-sur-Seine, France. Chanel's business challenges in the marketplace are briefly assessed and examined in this research. In addition, the paper will briefly outline the advertising and marketing process, as well as how Chanel should embrace a digital strategy to maintain growth in the following decade.

Background to the issue you plan to solve

The issue is that luxury brands such as Chanel are lagging behind the rapidly developing trend of e-commerce, and they need to implement a comprehensive Digital Strategy in order to keep their existing customers and expand their market share. Traditionally, luxury brand companies considered online shopping a platform for lower-end products and did not focus on investing in their social presence (Dauriz, Michetti, et al., 2014). However, the rapid development of online shopping platforms and the changing behaviour of customers, coupled with lockdown measures and cross-border restrictions due to the COVID-19 pandemic, have exposed the importance of digital sales and marketing even for luxury brands that depend heavily on in-person retail sales (McKinsey & Company, 2021). Fashion experts warn that luxury companies will not survive the current crisis unless they invest in their digital transformation (Gonzalo et al., 2020).

According to the global e-commerce outlook report by CBRE, the world's largest commercial real estate services and investment firm, online retail sales accounted for 18 per cent of global retail sales in 2020, a 140 per cent increase over the last five years, and are expected to reach 21.8 per cent in 2024 (CBRE, 2021). Moreover, as digital technology advances, customers' behaviour is changing rapidly: they not only prefer to make their purchases online but also make decisions based on their online searches (Dauriz, Michetti, et al., 2014). However, e-commerce accounted for only 4 per cent of luxury sales in 2014 (Dauriz, Remy, et al., 2014) and reached just 8 per cent in 2020 (Achille et al., 2018). This shows that luxury brands are slow to adapt to the changing environment of global trade and customer behaviour. At the same time, at least 45 per cent of all luxury sales are influenced by customers' social media experience and the company's digital marketing (Achille et al., 2018). In addition, almost 30 per cent of luxury sales are made by tourists travelling outside their home countries, so the luxury industry has been adversely affected by the current cross-border travel restrictions. Fashion weeks and trade shows were also disrupted for almost two years due to the pandemic. Therefore, fashion experts advise luxury companies to enhance their digital engagement with customers and to digitalize their supply chains (Achille & Zipser, 2020).

Chanel is the leading luxury brand for women's couture in the world. Its annual revenue is $2.54 billion, which is one of the highest in the world (Statista, 2021). Chanel's digital presence is quite impressive. It is one of the "most pinned brands" on social media, being pinned 1,244 times per day (Marketing Tips For Luxury Brands | Conundrum Media, n.d.). It has 57 million followers on social media and its posts are viewed by 300 million people on average (Smith, 2021). It has also been commended by some fashion experts for its "powerful narrative with good content" for marketing on social media (Smith, 2021). However, it has also been criticized for its poor website, which is not user-friendly (Taylor, 2021), and for its reluctance to embrace e-commerce (Interino, 2020). Therefore, Chanel needs to improve its digital presence by developing a comprehensive Digital Strategy.

Identify and articulate the case for a Digital Strategy at Chanel

Case for digital strategy at Chanel

As-Is State

After reviewing the Chanel case, we as consultants are all dissatisfied with the company's digital strategy. When making any kind of choice, businesses must first comprehend the customer's perspective. The current state of the firm's commerce was determined from the provided case, which shows that the existing web-based platform employed by the company is fairly restrictive. For instance, the company has built an eCommerce platform offering cosmetics and fragrance in fewer than 9 nations. The firm's internet penetration is lower than that of other industry players, and the business only offers a restricted set of e-services. Not only that, but the organisation uses many systems and databases in various geographical regions, which gives end consumers a fragmented experience. Besides that, the company is encountering technological organisation issues, such as failing to adequately align existing capabilities with forthcoming challenges and employing diverse models, all of which add to the business's complexity. At the same time, its social media marketing is grossly insufficient, failing to reach the target luxury audience as it should.

To-Be State

Following an examination of the firm's present digital strategy, it was found that the company has a number of potential opportunities that it must pursue in order to stay competitive in the market. The major goals of Chanel, according to analysis and research, are to improve the customer experience, bring in new consumers, establish brand connection and inspire advocacy, and raise product awareness. It has been determined that Chanel's digital strategy is outdated, as a result of which the company is unable to compete successfully with its competitors. Major competitors of Chanel, for example, used successful digital channels to offer products to end customers throughout the pandemic. It is suggested that the organisation implement an information system that can provide customers with a personalised and engaging experience. To resolve the existing business issues, it is critical for the organisation to incorporate advanced technology into its organizational processes in order to capture market share. The company's existing business challenges and their implications can be remedied by upgrading its e-commerce website and integrating it with new scalable technologies such as AI, Big Data, Machine Learning, and analytics. The company must also optimize its product line and re-evaluate its core value proposition for new-age luxury customers.

Literature Review

People have always been fascinated by stories, which are more easily remembered than facts. Well-told brand stories appear to have the potential to influence consumers' brand experiences which is "conceptualized as sensations, feelings, cognitions, and behavioral responses evoked by brand-related stimuli that are part of a brand's design and identity, packaging, communications, and environments" (Brakus et al, 2009 , p. 52). Story telling in a digital world is one of the effective ways to enables conversations between the brand and consumers. Chanel takes the advantage of digital marketing to communicate with their consumers via their website and social media the core value of the brand: Designer, visionary, artist, Gabrielle 'Coco' Chanel reinvented fashion by transcending its conventions, creating an uncomplicated luxury that changed women's lives forever. She followed no rules, epitomizing the very modern values of freedom, passion and feminine elegance (Chanel, 2005). For instance, the short film "Once Upon A Time..." by Karl Lagerfeld reveals Chanel's rebellious personality while showcasing her unique tailoring approach and use of unusual fabrics. Inside Chanel presenting a chronology of its history, how they transform from evolve from hats O fashion and became a leading luxury brand. No doubt Chanel has done an excellent job at narrating its culture, values, and identity, but the contents are mostly based on stories created by marketers or on the life of Coco Chanel. The brand image is static and homogeneous, and it is more like one-way communication, consumers cannot interact or participate in the brand's narrative.

Social media is more likely to serve as a source of information for luxury brands than as tool for relationship management. (Riley & Lacroix, 2003) Chanel was the most popular luxury brand on social media worldwide in April 2021, based on to the combined number of followers on their Facebook, Instagram, Twitter, and YouTube pages, with an audience of 81.4 million subscribers. (Statista, 2021) Chanel, as a prestigious a luxury brand, is taking an exclusive, even arrogant, stance on social media. It gives the audience multiple ways to access the valuable content they created while keeping them away from the comments generated by the content. The reasoning behind this approach is that Chanel wants to maintain consistency with their brand values and identity, which are associated with elegance, luxury, quality, detail attention, and a less-is-more approach. Nevertheless, social media can be a powerful tool that provide social data to better understand and engage customers, gain market insights, and deliver better customer service and build stronger customer relationships.

However, despite having the most social media followers, Chanel has the lowest Instagram interaction rate compared to Louis Vuitton, Gucci and Dior. Marketer and researcher increase social media marketing success rate by engaging with audience and consumers in real-time and collect audiences' big data for academic investigation. Put it in another way, social media engagement results in sales. It is imperative for in Chanel to not O just observe this model from afar, but actively challenge themselves to take advantage of it. To maintain their leadership In the luxury brand market, they must keep up with the constant changes in the digital world and marketplace and be more engaging with their audiences.

Chanel's revenue dropped dramatically from $12,273m to $10,108m (-17.6%) in 2020 due to the global pandemic, during which international travel was suspended and boutique and manufacturing networks were closed (Chanel, 2021). The pandemic has resulted in a surge in e-commerce and accelerated digital transformation; hence, many luxury fashion brands have pivoted their business from retail to e-commerce, including Chanel's competitors Gucci and Dior. Chanel is gradually adapting to a digital strategy and selling its products online, but only for perfume and beauty products. President of Chanel Fashion and Chanel SAS, Bruno Pavlovsky, said: "Today, e-commerce is a few clicks and products that are flat on a screen. There's no experience. No matter how hard we work, no matter how much we look at what we can do, the experience is not at the level of what we want to offer our clients." (L. Guibault, 2021) In 2018, Chanel Fashion entered a cooperation with Farfetch purely for the purpose of developing initiatives to enhance in-store consumer experiences; they insist on incorporating human interaction and a personal touch when it comes to fashion sales. Experts foresee that the pandemic could end in 2022, but Covid may never completely vanish, and life will never be the same again. Consumer behaviour has changed during Covid and will not follow a linear curve. Consumers will shop more through e-commerce, reduce shopping frequency, and shift to stores closer to home (S. Kohli et al, 2020). It is important to enhance digital engagement, but e-commerce is essential to maintain sales. It might not have had a substantial impact on Chanel's fashion sales in the past two years, but this will change with the advent of a new luxury consumer who wants high-quality personalised experiences offline and online.
Chanel needs to adapt fast and demonstrate their trustworthiness by providing a superior buying experience, exceptional customer service, and one-to-one connections in store and on e-commerce platforms.
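
The percentage drop in revenue quoted above can be checked with a short calculation. The sketch below is illustrative only: the function name `percent_change` and the variable names are assumptions introduced here, and the two revenue figures are the ones cited in the text.

```python
def percent_change(old: float, new: float) -> float:
    """Return the relative change from old to new, in percent."""
    return (new - old) / old * 100


# Revenue figures in millions of US dollars, as quoted in the text.
revenue_before = 12_273
revenue_2020 = 10_108

change = percent_change(revenue_before, revenue_2020)
print(f"{change:.1f}%")
```

Rounded to one decimal place, the result agrees with the -17.6% reported in the paragraph above.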

Recommended Solution

1. Deliver the company culture using a more efficient strategy

The culture, values and identity of Chanel come mainly from Coco Chanel. Although this is impressive, it is no longer attractive enough for the newly emerging market. Chanel needs to deliver their unique culture in a more effective way. For example, Chanel could launch a campaign for all customers to pay tribute to Coco Chanel. Customers could send Chanel the design that they think is most representative of Coco Chanel's style. This could encourage more customers to be curious about the culture and stories behind the brand, instead of telling the story through one-way communication. Especially in such an information-saturated time, a unique, long-standing culture is not that useful for attracting more customers unless it is used in a way that suits their current purchasing habits. According to Robert R (2006), it is wiser to create value with customers than to use customers; converting them from defectors to fans is more likely to happen when they are bonded with the brand. Moreover, Chanel used to focus more on the in-store retail experience, which might be part of their culture, since Chanel is a traditional luxury brand. However, people are more used to online shopping nowadays, and this is the trend. Chanel needs to invest more in online service to exhibit their culture and adapt to the current habits of consumers. The Chanel website is stylish, with attractive colors and visuals, but it is almost impossible for customers to find what they are looking for. A stylish website cannot be converted directly into revenue; they should make their website more user-friendly and functional. This is not hard for such a large company once they realize the issue.

2. Bond with the customers

Chanel used to have the largest number of followers on social media but has fallen behind Gucci and LV in the past few years, because they pushed too much content without enough interaction. Chanel needs to create a stronger bond with their existing and potential customers. Communication between Chanel and its customers seems to have been one-way in the past. Consumers receive messages from Chanel but have no channel to explain what they think about the brand and what they need from it. Therefore, Chanel should build a closer relationship with their customers through social media. The reasons for using social media as a channel are as follows. Firstly, it is a more cost-effective way to gain access to a huge market. Chanel could let more people know about their changes and newest products through it. They could also post different advertising to different selected customer bases. Secondly, social media provides a platform where Chanel can listen to the real needs of customers. Many customers think they need a platform to let the brand know what they need and hope to witness changes from the brand (Grant L, 2014). A successful brand should let customers believe that what they think matters. Although there is no need to adapt to all customer preferences, Chanel needs to show that the company treasures its relationship with its customers. Finally, failing to use social media platforms could lead to a huge loss of market share. While other brands are posting advertisements and communicating with customers, they are stealing customers from Chanel. Chanel needs to take up the same weapon in defense. In conclusion, engaging customers in projects and communication with the brand could help establish long-term relationships with customers and increase their loyalty.

3. Optimize the product line of the online store

The e-commerce market has grown remarkably in the past few years, and especially because of Covid, people are more used to online shopping. Therefore, Chanel needs to optimize the product line of their online store, bring their fashion line online and meet more of their customers' demand. Although the offline shopping experience of luxury brands has significant value, providing an extra choice could also be impressive, because customers are more informed and are demanding that the brand solve their problems and deliver an unforgettable shopping experience. One field in which Chanel could invest is the VR / AR fitting room. There may be reasons why customers cannot go to the retail shop, or there may be no suitable size of a product in store. A VR / AR fitting room enables customers to try various products online and choose their favorite. It could be more efficient since they could do it anywhere and anytime they want. At the same time, if they do not mind sharing detailed information, the VR fitting room could generate a model of the client, making the experience more visual. This could improve the shopping experience and attract more potential customers. In addition, Chanel could give different levels of access to different customer bases. This could help preserve the company culture of providing the best service for high-net-worth clients. People could raise their customer level by building up their purchase history. In summary, bringing a unique online shopping experience to customers could enable Chanel to take more market share and establish a better platform for further development.

Conclusion

This report studied the case of Chanel and analyzed the problems from which the company was suffering. It examined the issues present in the organization and found that Chanel had lost its unique value proposition along the way and also lagged behind in social media and web presence. Moreover, the firm's existing e-commerce platform has many weaknesses that have a negative impact on the company's continuity and market survival. Accordingly, after careful analysis, a few strategies were suggested so that the company can fix its social media, its digital presence and how it targets the new breed of luxury customers of today.

References


DATA4300 Data security and Ethics Report Sample

Part A: Introduction and use of monetisation

- Introduce the idea of monetisation.

- Describe how it is being used by the company you chose.

- Explain how it is providing benefit for the business you chose.

Part B: Ethical, privacy and legal issues

- Research and highlight possible threats to customer privacy and possible ethical and legal issues arising from the monetisation process.

- Provide one organisation which could provide legal or ethical advice.

Part C: GVV and code of conduct

- Now suppose that you are working for the company you chose as your case study. You observe that one of your colleagues is doing something novel for the company, however at the same time taking advantage of the monetisation for themself. You want to report the misconduct. Describe how giving voice to values can help you in this situation.

- Research the idea of a code of conduct and explain how it could provide clarity in this situation.

Part D: References and structure

- Include a minimum of five references

- Use the Harvard referencing style

- Use appropriate headings and paragraphs

Solution

Introduction and use of Monetization

Idea of Monetization

According to McKinsey & Co., the most successful and fastest-growing firms have embraced data monetization and made it an integral component of their strategy. Through direct data monetization, one sells direct access to data to third parties. There are two ways to sell it: one can either sell the accrued data in its raw form, or sell it in the form of analysis and insights. Data can also help one find out how to get in touch with customers and learn about their habits in order to increase sales. Using data, it is possible to identify where and how to cut costs, avoid risk, and increase operational efficiency. For the given case, the chosen industry is social media (Faroukhi et al., 2020).

How it is being used in the chosen organization

In order for Facebook to monetize its user data, it must first amass a large number of data points. This includes information on who we communicate with, what we consume and react to, and which websites and apps we visit outside of Facebook. Facebook collects many additional data points beyond these (Mehta et al., 2021). Because of the predictive potential of machine learning algorithms, companies can infer further information about users even if users don't explicitly reveal it themselves. The intelligence gathered through behavioural tracking is the essence of what is sold to Facebook's customers (Child and Starcher, 2016). Facebook generates 98 percent of its income from advertising, which is how its data is put to use.

Providing benefits to the organization chosen

Facebook's clients (advertisers and companies, not users) receive a plethora of advantages. Advertisers can target certain groups of people based on this information and change the message based on what actually works with them. Over ten million businesses, mostly small ones, make use of Facebook's advertising platform. Through the Facebook Ad platform, they are able to present advertising to targeted consumers and receive thorough performance data on how various campaigns, including different visuals, performed (Gilbert, 2018).

Ethical, Privacy and legal Issues

Threats to consumers

According to reports, Facebook is well known for using cookies, social plug-ins, and pixels to monitor users as well as non-users of Facebook. Even people who don't have a Facebook account aren't safe from this tracking, because there are a slew of other data sources that may be used in place of a Facebook account. Non-members can also be monitored when they visit any website that features the Facebook logo. In addition to cookies, web beacons are one of the numerous kinds of internet tracking that may be employed across websites, and these records can then be sold to relevant stakeholders. As a result, targeted voters might encounter reinforcing messages on a wide range of sites without understanding that they are the only ones receiving such communications, and without being warned that these are political campaign ads. Furthermore, governments throughout Europe and North America are increasingly requesting that Facebook hand over user data to help them investigate crimes, establish motives, confirm or refute alibis and uncover conversations. The phrase "fighting terrorism" has become a catch-all that has lost its meaning over time. Facebook's policy states: "We may also share information when we have a good faith belief it is necessary to prevent fraud or other illegal activity, [or] to prevent imminent bodily harm [...] This may include sharing information with other companies, lawyers, courts, or other government entities." (Facebook, 2021). In essence, privacy is protected only at face value, whereas the data is exposed to Facebook, third-party advertisers and governments.

IAPP can help with the privacy situation

The International Association of Privacy Professionals (IAPP) is a global leader in privacy, fostering discussions, debates, and collaboration among major industry stakeholders. It helps professionals and organisations understand the intricacies of the evolving privacy environment and how to identify and handle privacy problems, while providing tools for practitioners to improve and progress their careers (CPO, 2021). The IAPP provides networking, education, and certification for privacy professionals. It can also play a role in promoting the need for skilled privacy specialists to satisfy the growing expectations of businesses and organisations that manage data.

GVV and Code of Conduct

Fictional scenario

For the sake of a fictionalised context, I will assume that I am employed by Facebook. In this setting, a colleague is invading the privacy of businesses in a particular domain, collecting proprietary information based on the data collected, and then selling it to competitors of those businesses in the same domain. There are a lot of grey areas to contemplate and traverse when dealing with these kinds of tricky situations, and managing them professionally and without overreacting is essential. The most critical thing for me would be to figure out what is a genuine ethical problem and what is just something I don't like before I get involved. If my concerns are well-founded and the possible breach is significant, I would next ask myself two fundamental questions, and I can proceed only if both are answered with a resounding "yes."

Next, when someone works for a large, publicly traded company, there should be defined regulations and processes to follow whenever one detects an unlawful or unethical violation. One ought to be able to find these in the internal employee compliance manual. I would then decide whether to notify my colleague's supervisor. If that person is also complicit in the event, the next alternative would be to inform the reporting manager or compliance officer. Also, if I choose not to be involved in the investigation or reporting, I can either report anonymously or tell my superiors that I would not like to be named.

Reference list


ISYS1003 Cybersecurity Management Report Sample

Task Description

Since your previous two major milestones were delivered you have grown more confident in the CISO chair, and the Norman Joe organisation has continued to experience great success and extraordinary growth due to an increased demand in e-commerce and online shopping during COVID-19.

The company has now formalised an acquisition of a specialised “research and development” (R&D) group specialising in e-commerce software development. The entity is a small but very successful software start-up. However, it is infamous for its very “flexible” work practices and you have some concerns about its security.

As a result of this development in your company, you decide you will prepare and plan to conduct a penetration test (pentest) of the newly acquired business. As a knowledgeable CISO you wish to initially save costs by conducting the initial pentest yourself. You will need to formulate a plan based on some industry standard steps.

Based on the advice by Chief Information Officer (CIO) and Chief Information Security Officer (CISO) the Board has concluded that they should ensure that the key services such as the web portal should be able to recover from major incidents in less than 20 minutes while other services can be up and running in less than 1 hour. In case of a disaster, they should be able to have the Web portal and payroll system fully functional in less than 2 days.

Requirements:

1. Carefully read the Case Study scenario document. You may use information provided in the case study but do not simply copy and paste information. This will result in poor grades.

2. Well researched and high-quality external content will be necessary for you to succeed in this assignment.

3. Align all Assignment 3 items to those in previous assignments as the next stage of a comprehensive Cyber Security Management program.

You need to perform a vulnerability assessment and Business Impact Analysis (BIA) exercise:

1. Perform vulnerability assessment and testing to assess a fictional business Information system.

2. Perform BIA in the given scenario.

3. Communicate the results to the management.

Solution

Introduction

A pen test is another name for a penetration test, a type of test used to check for exploitable vulnerabilities that could be used in cyber-attacks [20]. The main reason for using penetration tests is to give security to an organization. The test offers a way to examine whether its policies are secure or not [14]. This type of test is very effective for any organization, and the demand for penetration tests is increasing day by day.

Proposed analytical process

A penetration test is very effective for securing any type of website [1]. A pentest is based on different types of processes and involves five steps [2]. The first step is planning the pentest, the second is scanning, the third is gaining access, the fourth is maintaining access, and the fifth is analysis.

Five methodologies are commonly used for penetration testing: NIST, ISSAF, PTES, OWASP, and OSSTMM. In this segment, the Open Web Application Security Project (OWASP) methodology is used [3]. The main reason for selecting this method is that it helps recognize vulnerabilities in mobile and web applications and discover flaws in development practices [15]. It also guides the tester in rating risks, which helps save time. Different types of box testing are used in a pentest. Black box testing is used when the internal structure of the application is completely unknown [16,17]. White box testing is used when the internal workings are known, and gray box testing is used when the tester partially understands the internal working structure [13].
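
The distinction between the three box-testing types can be summarised as a simple lookup on how much the tester knows about the target. The sketch below is only an illustration: the `Knowledge` enum and the `box_testing_type` function are hypothetical names introduced here and are not part of OWASP or any testing tool.

```python
from enum import Enum


class Knowledge(Enum):
    """How much the tester knows about the target's internals (hypothetical labels)."""
    NONE = "none"
    PARTIAL = "partial"
    FULL = "full"


def box_testing_type(knowledge: Knowledge) -> str:
    """Map the tester's knowledge of the internals to a box-testing type."""
    mapping = {
        Knowledge.NONE: "black box",    # internal structure completely unknown
        Knowledge.PARTIAL: "gray box",  # internal structure partially understood
        Knowledge.FULL: "white box",    # internal workings known
    }
    return mapping[knowledge]


for level in Knowledge:
    print(level.value, "->", box_testing_type(level))
```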

Ethical Considerations

The penetration test is used to find malicious content, risks, flaws, and vulnerabilities [4]. It helps to increase confidence in the company, and there are different types of processes that help to increase the productivity and performance of the company. The data that are used may be restored with the help of a pen test.

Resources Required

The hardware components used for performing pen tests include a port scanner, a password checker, and many more [5]. The software used for the penetration test includes zmap, hashcat, PowerShell-suite, and many more.

Time frame

This framework has a huge user community, and there is no shortage of articles, techniques, and technologies for this type of testing. The OWASP process is time-saving, so it is helpful in every step [19].


Question 3.1

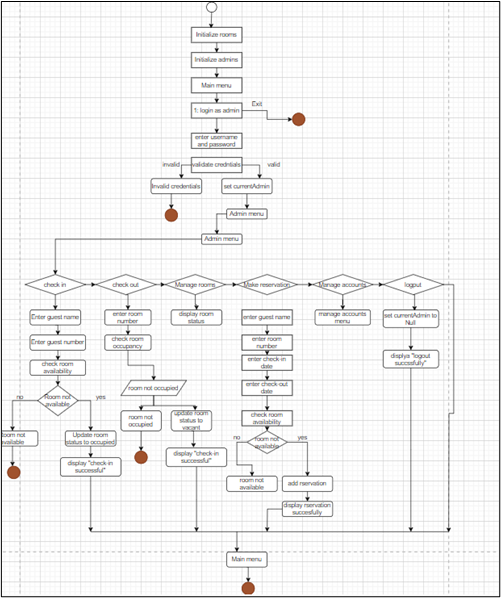

1. Analysis of Norman Joe before the BIA implementation

Business impact analysis (BIA) is the process of identifying and evaluating different types of potential effects [19]. These potential effects can arise in different fields, and the analysis helps to meet the range of requirements for business purposes [6]. The main purpose of a pentest of the web and mobile systems is to identify the main weaknesses or vulnerabilities of the business management system and so protect the business from major reputational and financial losses. To ensure the continuity of the business, regular checking and penetration testing are very important for the company [12]. BIA is a very important process for Norman Joe. Before implementing the BIA, Norman Joe had many security issues, and the company was also required to improve the firewall in its network system as well as the IDS [11]. As the firewalls are only designed to prevent attacks from outside the network, attacks from inside the network can easily harm the network and damage the workflow [7]. The company needs to implement internal firewalls to prevent such attacks. Firewalls can also be overloaded by DDoS protocol attacks; for this type of attack, the company needs to implement scrubbing services [16].


Figure 1: Before implementation of BIA for penetration testing

2. Analysis of Norman Joe after the BIA implementation

The process of business impact analysis has been carried out on Norman Joe to secure the company's system, and after implementing security measures such as the internal firewalls and the scrubbing services, the company's data has been mostly secured from cyber security threats [8]. After implementing the BIA, the website was tested by running it: the website was first started, and then the intercept of the website was performed [10].


Figure 2: After implementation of BIA for penetration testing

After the intercept process, it is checked whether the website is being used or not [11]. If the website is not being used, the user remains on the start page of the website. If the website is being used, the protocols in use are identified and checked, the information is gathered, and the penetration test is performed on the system [9]. Finally, the report of the penetration analysis and the vulnerability level are displayed after the test, and the analysis is finished.

Reference List


COMP1680 Cloud Computing Coursework Report Sample

Detailed Specification

This Coursework is to be completed individually.

Parallel processing using cloud computing

The company you work for is looking at investing in a new system for running parallel code, both MPI and OpenMP. They are considering either using a cloud computing platform or purchasing their own HPC equipment. You are required to write a short report analyzing the different platforms and detailing your recommendations. The report will go to both the Head of IT and the Head of Finance and so the report should be aimed at a non-specialist audience. Assume the company is a medium-sized consultancy with around 50 consultants, who will likely utilize an average of 800 CPU hours each a month. Your report should include:

1) A definition of cloud computing and how it can be beneficial.

2) An analysis of the advantages and disadvantages of the different commercial platforms over a traditional HPC.

3) A cost analysis, assuming any on-site HPC will likely need dedicated IT support.

4) Your recommendations to the company.

5) References

Solution

Introduction

This report aims to help the company invest in a new system so that it can run parallel code using OpenMP and MPI. The company is looking to either use a cloud computing platform or purchase its own HPC equipment. The report begins with the definition of cloud computing. The ways in which cloud computing is useful and can benefit a company are also described. The report shows how High-Performance Computing (HPC) works and how other commercial platforms work, and compares both. The next section analyses HPC and different commercial platforms, stating the advantages and disadvantages of using different commercial platforms over a traditional HPC. The points that show why the company should go with a particular platform are highlighted. The following section presents the cost analysis, which is based on the assumption that an on-site HPC will likely need dedicated IT support. After analyzing all these points, some recommendations to the company are given. The recommendations will help the company choose whether it should go with High-Performance Computing or a commercial platform, and give it an idea of how to invest in the new system for running parallel code. At the end of the report, a short conclusion summarizes each point presented in the report.

Definition of Cloud Computing

Cloud computing refers to the on-demand delivery of services such as data storage, computing power, and computer system resources. The delivery of services such as databases, networking, data storage, software, and servers over the internet is termed cloud computing. This on-demand availability is called cloud computing because the information and data accessed are found remotely, in virtual space or "the cloud". Cloud computing removes from the user's device all the heavy lifting involved in processing or crunching data (Al-Hujran et al. 2018), moving the work to huge computer clusters far away in cyberspace. As a general definition, cloud computing is a term for anything that involves delivering hosted services over the internet. Both software and hardware components are involved in the cloud infrastructure, and these are required for implementing a proper cloud computing model. Cloud computing can also be thought of as on-demand computing or utility computing. Hence, in short, cloud computing is the delivery of information technology resources over the internet (Arunarani et al. 2019).

Following are the points that show how cloud computing can be beneficial:

Agility- The cloud gives easy access to a large number of technologies so that users can develop almost anything they can imagine. Resources can be quickly spun up to deliver the desired result, from infrastructure services such as compute, storage, and databases to IoT, data lakes, analytics, machine learning, and many more. Technology services can be deployed in a matter of seconds, and ideas can be implemented orders of magnitude faster. With the help of cloud computing, a company can test several new ideas and experiments to differentiate customer experiences, and it can transform the business too (Kollolu 2020).

Elasticity- With cloud computing in a business enterprise, the system becomes capable of adapting to changes in workload by provisioning and deprovisioning resources in an autonomic manner. These resources can be scaled up or down instantly to grow or shrink capacity as the needs of the business change.
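
To make the idea of elasticity concrete, the following minimal sketch shows one way a scaling rule could decide how many instances to provision for a given workload. It is an illustration under stated assumptions, not how any particular cloud provider works: the function `desired_instances`, its parameters, and the load figures are all hypothetical.

```python
import math


def desired_instances(current_load: float,
                      capacity_per_instance: float,
                      min_instances: int = 1,
                      max_instances: int = 10) -> int:
    """Return how many instances are needed to serve current_load.

    All parameters are hypothetical: load and capacity are in the same
    arbitrary unit (for example, requests per second).
    """
    needed = math.ceil(current_load / capacity_per_instance)
    # Clamp to the allowed range so the pool never scales to zero
    # or grows without bound.
    return max(min_instances, min(max_instances, needed))


# Provision as load rises, deprovision as it falls.
for load in (50, 420, 2000, 120):
    print(load, desired_instances(load, capacity_per_instance=100))
```

The upper and lower bounds reflect a common design choice: a floor keeps the service available during quiet periods, and a ceiling keeps costs predictable during spikes.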

Security- Data security is something that almost every organization is concerned about. With the help of cloud computing, one can keep data and information private and safe (Younge et al. 2017). A cloud host carefully monitors security, which can be more effective than a conventional in-house system. In cloud computing, a user can also configure different security settings according to need.

Quality control- In a cloud-based system, a user can keep all documents in a single format and in a single place. This supports data consistency and helps avoid human error and the risk of data attack. If data and information are recorded in the cloud-based system, there is a clear record of any updates or revisions made to the data. On the other hand, if the data are kept in silos, documents may be saved in different versions, which can lead to diluted data and confusion.

Analysis of Different Platforms vs HPC

High-Performance Computing (HPC) is the ability to perform complex calculations and process data at very high speed. The supercomputer is one of the best-known solutions for high-performance computing. It consists of a large number of compute nodes that work together at the same time to complete one or more tasks. This simultaneous processing on multiple computers is called parallel processing. Compute, network and storage are the three components of an HPC solution. In general terms, HPC means aggregating computing power in such a way that it delivers higher performance than one could get out of a typical desktop. Despite having many advantages, it also has some disadvantages. The following points analyse the advantages and disadvantages of the different commercial platforms over a traditional HPC.

The advantages of different platforms over a traditional HPC are as follows:

- From the perspective of equipment cost, high-performance computing appears very expensive. The cost of using a high-performance computing cluster in the cloud is not fixed and varies according to the type of cloud instances the user uses to create the HPC cluster. If the cluster is needed for a very short time, the user creates it from on-demand instances, and the instances need to be deleted after use (Tabrizchi and Rafsanjani 2020). This cost is almost five times higher than that of a local cluster. However, moving to other cloud computing platforms reduces the cost of managing and maintaining IT systems. On these platforms, there is no need to buy any equipment for the business. The user can reduce costs by using the resources of the cloud computing service provider. This is one of the benefits of other platforms over traditional HPC.

- Cloud computing platforms, unlike traditional HPC, permit users to be more flexible in their work practices. For instance, users are able to access data from home, on the commute to and from work, or on holiday. If users need access to data while off-site, they can connect to it at any time, easily and quickly. This is not the case with traditional HPC, where the user has to be at the system to access the data. With an HPC cloud, it is also difficult to move the data that are used in HPC (Varghese and Buyya 2018).

- Having separate clusters in the cloud in the data centers poses some security challenges. On the other cloud computing platforms, however, there is no such risk associated with data. One can keep and store data in the system safely and privately. The user does not need to worry in situations such as a natural disaster, a power failure or other difficulties, because the data are stored safely in the system. This is another advantage of other platforms over traditional HPC (Mamun et al. 2021).

Disadvantages of other platforms over traditional HPC are as follows:

- Like any other setup, the cloud can experience technical problems such as network outages, downtime, and reboots. These incidents can halt business operations and processes and can damage the business.

- On cloud platforms other than HPC, the cloud service provider owns, monitors, and manages the cloud infrastructure. The customer does not get complete access to it and has minimal control over it. Some administrative tasks, such as managing or updating firmware or accessing the server shell, are not available to the customer.

- Not every feature is available on every cloud computing platform (Bidgoli 2018). Cloud services differ from one another: some providers offer limited versions and others offer only the most popular features, so the user may not get every customization or feature needed.

- Another disadvantage of cloud computing platforms compared with high-performance computing is that the user hands over all information and data when moving services to the cloud. Even companies that have in-house IT staff are not able to handle issues on their own (Namasudra 2021).

- Downtime is one of the disadvantages of cloud-based services, and some experts consider it the biggest drawback of cloud computing. Because cloud computing depends on the internet, there is always a chance of a service outage for reasons outside the user's control (Kumar 2018).

- Every component that remains online in cloud computing exposes potential vulnerabilities. Many companies have suffered severe attacks on their data and security breaches.

The sections above analyze other commercial platforms in comparison with traditional high-performance computing.

Cost Analysis

It is very important to know how the cloud provider sets the prices of its services. The company has appointed a team of cost analysts to calculate the total cost that the company will bear to set up the cloud-based platform. The team will decide which costs to take into consideration and which to exclude from the calculation. On the HPC side, dedicated IT support will be needed. Network, compute, and storage are the three cost centers of the cloud environment. The points below set out the cost analysis of the cloud service and give an idea of how cloud providers decide how much to charge the user.

Network- When setting the price of the service, the cloud provider determines the expense of maintaining the network. This includes the cost of maintaining the network infrastructure, the cost of network hardware, and the labor cost. The provider sums these costs and divides the result by the number of rack units the business needs for its Infrastructure as a Service cloud (Netto et al. 2018).

- Maintenance of network infrastructure- this includes the cost of security tools such as firewalls, together with patch panels, LAN switching, load balancers, uplinks, and routing. It covers all the infrastructure that helps the network run smoothly.

- Cost of network hardware- every service provider needs to invest in some type of network hardware. The providers buy hardware and charge the depreciation cost over the device lifecycle.

- Labor cost- this includes the cost of maintaining, monitoring, managing, and troubleshooting the cloud computing infrastructure (Zhou et al. 2017).

Compute- every business enterprise has its own compute requirements, including CPU. Service providers calculate the cost of CPU by determining the cost per GB of RAM borne by the company.

- Hardware acquisition- this is the cost of acquiring hardware for every GB of RAM that the user will use. This cost is also depreciated over the lifecycle of the hardware.

- Hardware operation- the provider takes the total cost of the RAM in the public cloud and divides it by the cost per rack unit of the hardware. This cost includes usage-based subscription costs and licensing.

Storage- storage costs are calculated in the same way as compute costs. The service provider works out the cost of operating the storage hardware and of acquiring new hardware to meet the users' storage needs (Thoman et al. 2018).
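The network and compute calculations described above can be illustrated with a short Python sketch. It is only an approximation under stated assumptions: straight-line depreciation is assumed, the compute formula is simplified to depreciated acquisition cost plus operating cost divided by total RAM, and every name and figure is hypothetical rather than taken from any provider's actual pricing.

```python
from dataclasses import dataclass


@dataclass
class NetworkCosts:
    """Monthly network cost inputs (all figures are hypothetical)."""
    infrastructure_maintenance: float  # firewalls, switches, load balancers, uplinks
    hardware_purchase_price: float     # up-front price of the network hardware
    hardware_lifecycle_months: int     # period over which the hardware is depreciated
    labor: float                       # maintaining, monitoring, troubleshooting


def monthly_depreciation(purchase_price: float, lifecycle_months: int) -> float:
    """Straight-line depreciation (an assumption): spread the price evenly."""
    if lifecycle_months <= 0:
        raise ValueError("lifecycle_months must be positive")
    return purchase_price / lifecycle_months


def network_cost_per_rack_unit(costs: NetworkCosts, rack_units: int) -> float:
    """Sum the three network cost components and divide by the rack units."""
    if rack_units <= 0:
        raise ValueError("rack_units must be positive")
    total = (
        costs.infrastructure_maintenance
        + monthly_depreciation(costs.hardware_purchase_price,
                               costs.hardware_lifecycle_months)
        + costs.labor
    )
    return total / rack_units


def compute_cost_per_gb_ram(acquisition_price: float, lifecycle_months: int,
                            monthly_operating_cost: float,
                            total_ram_gb: float) -> float:
    """Simplified compute cost per GB of RAM per month (an assumption).

    Adds depreciated acquisition cost to operating cost (subscriptions,
    licensing) and divides by the total RAM provisioned.
    """
    if total_ram_gb <= 0:
        raise ValueError("total_ram_gb must be positive")
    monthly_total = (monthly_depreciation(acquisition_price, lifecycle_months)
                     + monthly_operating_cost)
    return monthly_total / total_ram_gb


if __name__ == "__main__":
    network = NetworkCosts(2000.0, 36000.0, 36, 3000.0)
    print(network_cost_per_rack_unit(network, rack_units=40))
    print(compute_cost_per_gb_ram(48000.0, 48, 600.0, total_ram_gb=512))
```

Storage could be costed with the same pattern as compute, substituting storage capacity for RAM.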

Recommendations

As the company is looking to invest in a new system for running parallel codes, this section sets out some recommendations. They will help the company decide which system to invest in so that it can run parallel codes smoothly. On the basis of the analysis above, the company should go with the platforms available in cloud computing. Traditional HPC presents many barriers to users, such as high cost and the problem of moving and storing data. Cloud computing is not ready for the company to use as it stands, however, so the following recommendations are intended to improve the company's use of the cloud platforms:

Recommendations to minimize planned downtime in the cloud environment:

- The company should design the new system's services with disaster recovery and high availability in mind. A disaster recovery plan should be defined and implemented in line with the company's objectives, providing the lowest possible recovery time and recovery point objectives.

- The company should leverage the different zones provided by the cloud vendors. To achieve high fault tolerance, the company is advised to consider multi-region deployments with automated failover in order to ensure business continuity.

- Dedicated connectivity, such as AWS Direct Connect or Google Cloud's Dedicated Interconnect and Partner Interconnect, should be implemented, as these services provide a dedicated network connection between the user and the cloud service point. This will reduce the business's exposure to the risk of interruption from the public internet.

Recommendations to the company with respect to the security of data:

- The company is advised to understand the shared responsibility model of its cloud providers. Security should be implemented at every step of the deployment. The company should know who holds access to each of its resources and data, and should limit access according to the principle of least privilege.

- The company is advised to implement multi-factor authentication for all accounts that provide access to systems and sensitive data, and to turn on encryption wherever it is available. The company should adopt a risk-based strategy that secures all assets hosted in the cloud and extends security to the devices.

Recommendations to the company to reduce the cost of the new cloud-based system:

- The company should ensure that options to scale UP and DOWN are available.

- If the company has a steady minimum level of usage, it should take advantage of pre-pay and reserved instances for that usage. The company can also automate stopping and starting instances so that it saves money when the system is not in use. To track cloud spending, the company can also create an alert.
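The stop/start automation and the spending alert mentioned above can be sketched in plain Python. This is a minimal illustration only: the weekday business-hours policy, the 80% threshold, and the function names are all assumptions, and a real deployment would connect this logic to the chosen provider's own scheduling and billing tools.

```python
from datetime import datetime


def should_run(now: datetime, start_hour: int = 8, stop_hour: int = 18) -> bool:
    """Return True if instances should be running at `now`.

    Hypothetical policy: run Monday to Friday, from start_hour
    (inclusive) to stop_hour (exclusive).
    """
    is_weekday = now.weekday() < 5  # Monday is 0, Sunday is 6
    return is_weekday and start_hour <= now.hour < stop_hour


def spending_alert(spend_to_date: float, monthly_budget: float,
                   threshold: float = 0.8) -> bool:
    """Return True once spend reaches the given fraction of the budget."""
    if monthly_budget <= 0:
        raise ValueError("monthly_budget must be positive")
    return spend_to_date >= threshold * monthly_budget


if __name__ == "__main__":
    # Made-up values for illustration only.
    print(should_run(datetime(2024, 1, 8, 9, 0)))        # a Monday morning
    print(spending_alert(850.0, monthly_budget=1000.0))  # 85% of budget spent
```

A scheduler could call `should_run` periodically and stop or start instances whenever the result changes.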

Recommendations to the company to maintain flexibility in the system:

- The company should consider engaging a cloud provider that can help with implementing, supporting, and running the cloud services. It is also necessary to understand the vendor's responsibilities in the shared responsibility model in order to reduce the chance of errors and omissions.

- The company must understand the SLA as it applies to the services and infrastructure that it is going to use, and before developing the new system it should understand all the impacts on existing customers.

By following the recommendations above, the company can decide how and where to invest in the development of the new system.

Conclusion

The report was prepared to help the company decide which system to invest in. The company has two options: it can use a cloud computing platform or it can purchase all the equipment needed for HPC. The report has explained the meaning of cloud computing and its benefits for a business organization. In simple terms, cloud computing is the delivery of a product or service over the internet. The report has analyzed both systems, setting out the advantages and disadvantages of the other platforms relative to high-performance computing so that it is easier to decide where the company should invest. The comparison is based on factors such as the cost of the system, the security of data, and control over access. A structure for cost analysis has also been presented so that the company can estimate how much it will have to invest in the new system. Some recommendations to the company are also given. The company is advised to choose the cloud computing platform, as it is very secure and costs less to set up than the alternatives.

References

MIS607 Cybersecurity- MITIGATION PLAN FOR THREAT REPORT SAMPLE

Task Summary

Reflecting on your initial report (A2), the organisation has decided to continue to employ you for the next phase: risk analysis and development of the mitigation plan.

The organisation has become aware that the Australian Government (AG) has developed strict privacy requirements for business. The company wishes you to produce a brief summary of these based on real-world Australian government requirements (similar to how you used real-world information in A2 for the real-world attack).

These include the Australian Privacy Principles (APPs), especially the requirements on notifiable data breaches. The company wants you to examine these requirements and advise them on their legal requirements. Also ensure that your threat list includes attacks that lead to customer data breaches. The company wishes to know if the GDPR applies to them. The word count for this assessment is 2,500 words (±10%), not counting tables or figures. Tables and figures must be captioned (labelled) and referred to by caption. Caution: Items without a caption may be treated as if they are not in the report. Be careful not to use up word count discussing cybersecurity basics. This is not an exercise in summarizing your class notes, and such material will not count towards marks. You can cover theory outside the classes.

Requirements

Assessment 3 (A3) is in many ways a continuation of A2. You will start with the threat list from A2, although feel free to make changes to the threat list if it is not suitable for A3. You may need to include threats related to privacy concerns. Beginning with the threat list:

- You need to align threats/vulnerabilities, as much as possible, with controls.

- Perform a risk analysis and determine controls to be employed.

- Combine the controls into a project of mitigation.

- Give advice on the need for ongoing cybersecurity, after your main mitigation steps.

Note:

- You must use the risk matrix approach covered in classes. Remember risk = likelihood x consequence.

- You should show evidence of gathering data on likelihood, and consequence, for each threat identified. You should briefly explain how this was done.

- At least one of the risks must be so trivial and/or so expensive to control that you decide not to control it (in other words, in this case, accept the risk). At least one of the risks, but obviously not all.

- Provide cost estimates for the controls, including policy or training controls. You can make up these values but try to justify at least one of the costs (if possible, use links to justify costs).
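The risk matrix calculation named in the notes above (risk = likelihood x consequence) can be sketched in Python. This is an illustration under assumptions, not the method taught in class: the 1 to 5 scales, the rating bands, the rule of accepting only Low risks, and the example threats and costs are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Threat:
    name: str
    likelihood: int      # 1 (rare) to 5 (almost certain); assumed scale
    consequence: int     # 1 (insignificant) to 5 (severe); assumed scale
    control_cost: float  # estimated cost of the control; made-up figure


def risk_score(threat: Threat) -> int:
    """Risk = likelihood x consequence."""
    for value in (threat.likelihood, threat.consequence):
        if not 1 <= value <= 5:
            raise ValueError("likelihood and consequence must be between 1 and 5")
    return threat.likelihood * threat.consequence


def risk_rating(score: int) -> str:
    """Map a score to a rating band (the band boundaries are assumptions)."""
    if score >= 15:
        return "Extreme"
    if score >= 10:
        return "High"
    if score >= 5:
        return "Medium"
    return "Low"


def decision(threat: Threat) -> str:
    """Accept Low risks; plan a control for everything else (assumed rule)."""
    return "accept" if risk_rating(risk_score(threat)) == "Low" else "mitigate"


if __name__ == "__main__":
    # Hypothetical threat list for illustration only.
    threats = [
        Threat("Phishing", 4, 4, 5000.0),
        Threat("Customer data breach", 3, 5, 20000.0),
        Threat("Defaced marketing page", 1, 2, 8000.0),
    ]
    for t in sorted(threats, key=risk_score, reverse=True):
        print(t.name, risk_score(t), risk_rating(risk_score(t)), decision(t))
```

Sorting by score puts the threats that most need controls first, and the accepted risk at the bottom matches the requirement to leave at least one risk uncontrolled.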

Solution

Introduction

A mitigation plan is a method for developing actions and options in response to risk factors. It helps to create opportunities and to reduce the threats to project objectives. In this section, the researcher discusses threat analysis using the risk matrix method, threats and controls, and mitigation schemes. A threat model is a structured representation of the data collected about an application's security. Essentially, it is a view of applications and their environment in terms of security. In other words, threat modeling is a structured process that focuses on potential security threats and vulnerabilities. In addition, the threat model includes the seriousness of each threat identified. It also sets out the particular techniques that can be used to mitigate these threats. Threat modeling has several significant steps that must be followed to mitigate threats from cybercrime.

Body of the Report

Threat Analysis

Threat analysis is a process generally used to determine which components of a system are exposed to threats. There is a strong need to protect data against various types of security threat. Threat analysis identifies the information and physical assets of an organization that are at risk. The organization should understand the serious threats to its assets, as this understanding strengthens the mitigation plan in the threat report (Dias et al. 2019).

Organizations determine the effects of economic losses using qualitative and quantitative threat analysis. Threat analysis establishes how ready an organization is, which is a crucial risk factor in carrying out any project. Threat analysis involves several important steps. The first is to recognize the sources of risk factors or threats. The second is to categorize the threats and build a community-based profile. The third is to determine the weaknesses, and the fourth is to create scenarios and apply them. The final step is to make a plan for emergencies.

Threat analysis mainly follows the risk matrix concept in carrying forward the mitigation plan for a research report. There are four types of mitigation strategy: acceptance, transference, limitation, and avoidance (Allodi & Massacci 2017).

Table 1: Risk matrix methods

(Source: Self-created)

Cyber Hacking