Reports

HI5030 System Analysis and Design Report Sample

This assessment relates to the unit learning outcomes as in the Unit of Study Guide. This assessment is designed to give students skills to explore the latest system analysis and design trends, challenges, and future directions.

In this individual research paper, you will explore a contemporary issue in systems analysis and design, integrating academic research with practical application. The assignment is designed to deepen your understanding of the selected issue and its real-world impact on business information systems. You will critically analyse the issue, apply relevant methodologies to a real-world or hypothetical scenario, and propose solutions to address the challenges it presents.

1. Issue Selection:

- What You’ll Do: Choose a contemporary issue from the list provided by the unit coordinator. Some examples of issues you might consider include:

o Agile vs. Waterfall Methodologies: The challenges and benefits of agile methodologies compared to traditional waterfall approaches in system development.

o Data Privacy and Security: How emerging privacy regulations, such as GDPR, impact system design and the challenges of ensuring compliance while maintaining system efficiency.

o Integration of AI in System Design: The opportunities and challenges of incorporating artificial intelligence into business information systems, including issues related to data quality, bias, and explainability.

o Cloud-Based Systems vs. On-Premises Systems: The considerations and trade-offs involved in choosing cloud-based solutions over traditional on-premises systems, including security, cost, and scalability.

o User-Centred Design: The importance of user experience (UX) in systems analysis and design, and the challenges of balancing user needs with technical requirements.

2. Research and Practical Application:

- What You’ll Do: Start by conducting a literature review on your chosen issue. Look for academic articles, industry reports, and case studies that discuss the issue in depth. Then, apply the insights from your research to a practical scenario. For example:

o Agile vs. Waterfall Methodologies: After reviewing the strengths and weaknesses of both approaches, you could apply them to a project management scenario in a software development company. You might create a prototype or design document showing how an agile approach could be adapted to a traditionally waterfall project, highlighting the potential benefits and challenges.

o Data Privacy and Security: If your issue is data privacy, you could apply your research to a company that needs to redesign its information systems to comply with GDPR. This could involve proposing specific changes to data storage and processing practices and demonstrating how these changes would be implemented.

3. Impact and Solution Analysis:

• What You’ll Do: Analyse how the selected issue impacts the design and implementation of business 1 information systems in your scenario. Discuss how the issue affects system functionality, data management, and the communication of requirements to stakeholders. Then, propose practical solutions or methodologies that could address these challenges. For example:

o Agile vs. Waterfall Methodologies: Your analysis might reveal that while agile offers greater flexibility, it may be less effective in highly regulated industries where detailed documentation is required. You could propose a hybrid approach that incorporates the flexibility of agile with the structure of waterfall.

o Integration of AI in System Design: You might identify that the use of AI introduces new challenges in data quality and bias. To address this, you could propose a framework for regular audits of AI systems to ensure they are fair and transparent.

4. Documentation and Communication:

• What You’ll Do: Compile your findings, analysis, and proposed solutions into a well-organized research paper. Your paper should clearly articulate both the theoretical research and the practical application. Ensure your writing is accessible to both technical and non-technical audiences, using clear explanations and supporting your arguments with evidence from your research and application.

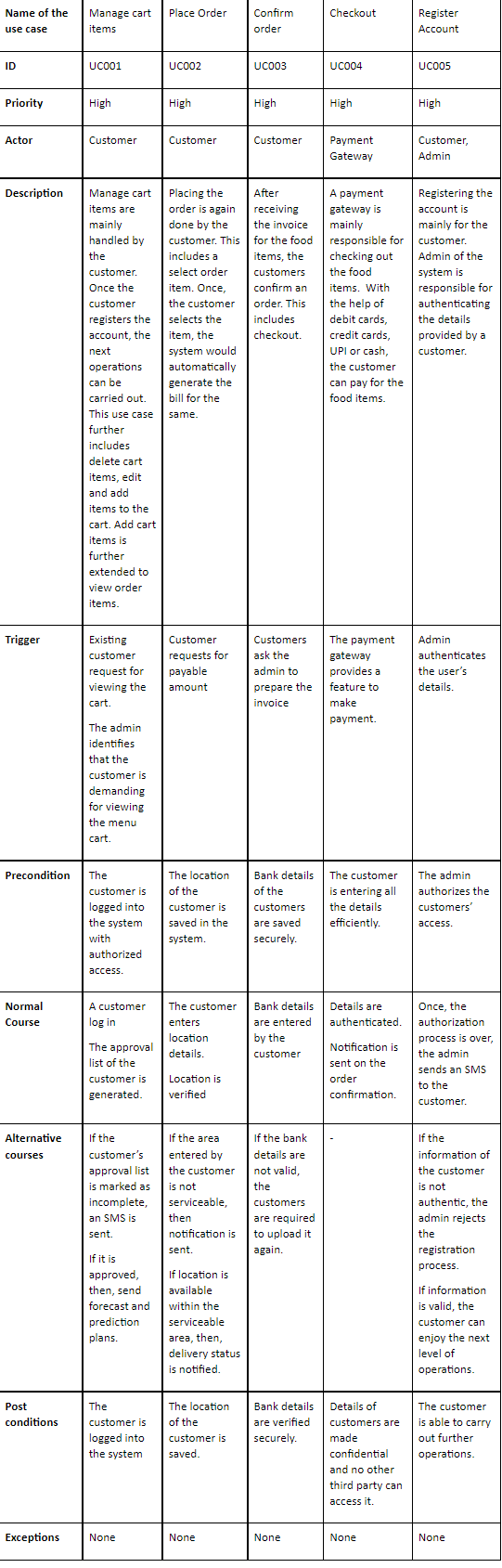

Solution

Introduction

Background

The rapid expansion in digital platforms has forced data security and privacy to become integral in modern-day processes. With private information becoming an asset, governments and regulating bodies have formulated strong policies such as GDPR in an attempt to secure information regarding users from misuse, unauthorized access, and information leakages. GDPR compels a strong collection of requirements such as transparency in processing, granting users high levels of information control, and having strong security controls in place (Sharma, 2019, p5-33). Organizations must re-engineer system approaches in compliance with such requirements in a manner that maintains efficiency in the system intact.

Purpose

This report for the Assignment Help aims to examine how GDPR affects system design, such as its challenge for organizations in achieving compliance at a cost not incurring a loss in system performance. It involves an in-depth analysis of methodologies and approaches companies can use in harmonizing information systems with GDPR compliance at a level not compromise operational agility.

Scope

The study addresses information systems in financial services and in e-commerce whose operations entail working with sensitive information regarding users at a fundamental level. Analysis entails compliance issues, impact on system structures, and proposed solutions in terms of using privacy-preserving technology and automation.

Structure

The report is organized into key sections, beginning with an analysis of GDPR and similar legislation, followed by a careful analysis of compliance barriers and ramifications for commercial infrastructure, and then a real-world application case study to present actual compliance methodologies, culminating in proposed methodologies and an implementation schedule for enhancing security for information and compliance with legislation.

Literature Review

Theoretical Background

Major GDPR compliance concepts are privacy-by-design, data minimization, encryption, and lawful processing of data. Privacy-by-design ensures that data protective measures are included in system development at an early stage and not appended later. Data minimization entails collecting data that is pertinent to a certain purpose, reducing exposure to security threats. Encryption secures stored and in-transit data, and access to them in an unauthorized way is difficult. Lawful processing of data ensures openness in processing an individual's data and for a certain purpose defined under GDPR. Kamocki and Witt, (2020) state that compliance is enhanced through privacy-by-design principles, through inclusion of security in system architecture, and reducing security breaches (Kamocki, and Witt, 2020, p3423-3427). Daniel, 2022 further identifies that secure processing and encryption are vital considerations in the protection of users' information, and in reducing exposure to cybersecurity threats (Daniel, 2022, p33-43).

Current Research

Recent studies have concentrated specifically on GDPR's function in redefining information security architectures. Research identifies added expenses and complexity, particularly for SMEs, with compliance. Most companies have a problem with interpreting GDPR requirements, and therefore, have an ad hoc form of compliance. According to studies, GDPR encourages increased trust in consumers, but full compliance is challenging with changing requirements and technological development. Höglund, (2019) believes that blockchain technology can deliver secure, unalterable transaction logs in supporting GDPR compliance, particularly in terms of controlling data (Höglund, 2019, p12-32). Carroll et al., in 2023 refer to using AI for compliance automation, with companies being capable of tracking and controlling user information and reducing human intervention and mistakes (Carroll et al., 2023, p1-19).

Relevance to Business Information Systems

Compliance needs to shape business systems through an impact on database structure, access controls, and authentication processes for users. GDPR necessitates that companies implement strong access controls in a move to stop unauthorized access to sensitive information. Companies must also maintain thorough logs of processing activity, and therefore, must modify database administration systems. Rules for the right to be forgotten and data portability require companies to implement processes through which users can request deletion of information or move information to new service providers. Business information systems must also include real-time tracking tools to detect information breaches and honor GDPR’s 72-hour breach reporting requirement. According to MBULA, (2019), companies that fail to comply with these requirements face significant penalties, and compliance is a key concern for system architects (MBULA, 2019, p1-7). In addition, studies conducted by Höglund (2019) have determined that GDPR compliance is driving companies to redesign their system architectures with enhanced security capabilities, including biometric authentication and sophisticated algorithms for encryptions (Höglund, 2019, p12-32).

Analysis of the Issue

Description of the Issue

GDPR compliance involves several technical, operational, and legal complexities. Organizations must maintain security for information, manage consent, and have effective breach response processes in position. Having to maintain compliance and yet preserve efficiency in the system involves additional complications. Organizations must redesign systems in a way that incorporates privacy-related controls in a manner that doesn't affect operations. Regulatory bodies have severe penalties for non-compliance, and compliance is, therefore, significant in terms of financial and reputational loss avoidance. Besides, GDPR involves proactive security for information, with continuous updating and tracking in terms of emerging vulnerabilities and changing legislation and laws (Tamburri, 2020, p1-14).

Impact on Business Information Systems

Companies must re-engineer information systems to:

- Implement encrypted data and secure access protocols in compliance with GDPR security standards, such as end-to-end encryption, secure key management, and complex authentication processes to resist unauthorized access to information (Pookandy, 2020, p19-32).

- Ensure compliance with the rights of users over information, such as erasure and portability. Organizations must have processes in place through which deletion and moving of information can be requested in terms of GDPR’s principle of right-to-be-forgotten.

- Develop real-time auditing capabilities in a manner that will enable companies to monitor and log all processing activity for data, for transparency and for compliance with regulators to verify compliance.

- Introduce role-based access controls to limit access to sensitive information based on job roles. Individual datasets can only have access for approved staff, and through this, security and data vulnerabilities will be reduced to a minimum.

- Conduct regular compliance audits and risk assessments to assess potential vulnerabilities in business information systems and apply corrective actions in anticipation of breaches taking place.

Comparison of Methodologies/Approaches

- Traditional security methodologies focus first and foremost on perimeter security, such as anti-malware and firewalls, but lack in GDPR compliance through an insufficiency of deeper security processes for safeguarding information. Traditional methodologies provide a baseline level of security, but not one capable of supporting modern compliance frameworks with a focus on safeguarding information at all processing stages.

- Privacy-enhancing technologies (e.g., homomorphic encryption) allow for increased compliance through computations over encrypted data, not in its plaintext state. In such a way, private information is preserved even during processing, but such techniques require high computational powers, with increased operational costs and system overheads (Hall, 2024, p39-46).

- Hybrid approaches integrate AI-facilitated compliance monitoring with traditional security methods. AI-facilitated compliance tools can monitor compliance with policies, detect outliers, and manage risks with effectiveness. Hybrid approaches, through a combination of traditional security methodologies and emerging techniques for protecting privacy, integrate compliance and system performance harmoniously. Organizations can utilize AI-facilitated auditing tools to scan high volumes of information in real time, and compliance can be guaranteed with reduced intervention.

Practical Application

Scenario Description

A multinational e-commerce retailer will have to re-engineer its information management system in compliance with GDPR legislation. The retailer processes enormous amounts of information about its customers, including payment details, individual identifiers, and web browsing behavior. GDPR compliance will require significant changes in collecting, storing, and accessing information in a way that doesn't affect operational efficiency in any manner. Embedding security controls in a way that doesn't impair user experience will challenge the retailer, and a careful balancing act between compliance and agility in operations will be a necessity.

The introduction of new technology such as artificial intelligence (AI) and machine learning (ML) brings opportunity and challenge together. AI not only will enhance security for information but will require a high level of governance for ethics compliance. Cross-border transmissions of information will have to be handled, and with them, additional legal requirements will become applicable. In this case study, an optimized compliance path with GDPR and at the same time not compromising efficiency in operations will be exhibited.

Application of Methodologies

Automated Consent Management Systems: Implementing automated consent management tools keeps users in control of collecting and processing information about them. Automated consent management tools make it easier for users to opt in and withdraw consent at any stage, in compliance with GDPR requirements. Automated tracking of consent reduces administration and lessens the risk of non-compliance through having a current record of a user's preference (Chhetri et al., 2022, p.2763(5)).

Multi-Factor Authentication (MFA): The adoption of multi-factor authentication (MFA) fortifies security for information through a multi-step access-granting verification process. By utilizing biometric authentication, one-time passwords (OTPs), and hardware tokens, MFA reduces unauthorized access with high-security protocols in position for safeguarding sensitive information about a user in case of future hacks and cyber attacks (Lawson, 2023, p1-13).

Data Retention Policies: Developing robust data retention policies assists in deleting unnecessary and outdated information automatically in a manner that adheres to GDPR requirements. Having an automatic mechanism for deleting information lessens vulnerability to over-storage of information, and subsequently lessens vulnerability to a data breach. Policies also allow companies to have effective use of storage and compliance with requirements for minimizing information under legislation.

Real-Time Compliance Monitoring: Integrating real-time compliance tracking tools helps companies monitor processing activity and label suspected violations in real-time. AI-powered analysis tells companies about processing activity for specific users, and companies can actively correct compliance failures in real-time even when no failure actually occurred.

Incident Response and Breach Notification Protocols: Establishing structured incident reporting and breach notification processes helps in minimizing loss in case of a breach. GDPR mandates organisations to report to regulators in 72 hours when a breach is discovered. Automated incident reporting and incident resolution processes enable rapid incident identification, containment, resolution, compliance, and trust with stakeholders (Obanla and Sapozhnikov, 2019, p12-20).

Outcome

The redesigned system vastly improves security for the data, with increased compliance with GDPR and operational effectiveness for the organization. Automated consent management enables transparency and trust with the users, and multi-factor authentication strengthens access controls. Data retention policies minimize unnecessary storing of data, safeguarding against future legal repercussions. Real-time compliance checking reduces compliance-related danger, with ongoing compliance with developing standards. AI and ML integration fortifies security for the data and enables real-time danger detection and response. Automated reporting processes streamline GDPR compliance audits, with reduced administration involved.

By balancing security, compliance, and operational efficiency, the organization achieves an expandable and flexible information management infrastructure. In the long-term, the enhanced system enables a safer, user-centric information environment for both the organisation and its clients. Implementation of AI-powered security and compliance tools not only ensures GDPR compliance but puts the organization at an edge in terms of ethical information processing and cybersecurity solidity.

Proposed Solutions

Solution Description

To address GDPR compliance in an effective manner, companies must undertake a multi-faceted approach comprising encryption, governance, and compliance automation.

Encryption and access controls: Secure information at rest and in transit with robust encryption algorithms like AES-256. Implement multi-dimensional authentication processes, such as biometric and multi-factor authentication (MFA), to safeguard access (Iavich et al., 2024, p70-78).

Data Governance Framework: Adopt a documented data governance model with role-based access controls (RBAC), real-time activity logging, and automated audit trails for compliance with GDPR transparency and accountability requirements (Nookala, 2024, p2-18).

Automated Compliance Monitoring: Install AI-based compliance monitoring software that periodically audits system logs and data transactions for suspected compliance failures. Automated software can detect unapproved access, label non-compliant data storage, and generate real-time alerts for security professionals

Justification

Implementing these solutions strengthens security for data and ensures efficiency in the system. Encryption keeps even unauthorized access at bay, and yet, keeps the information in an uninterpretable and secure state. Having a strong data governance model reduces the chance of any kind of human errors, and with that, helps companies comply with regulatory requirements with ease. Automated compliance checking reduces the burden of constant checking, allowing companies to detect and correct any compliance-related issues.

Implementation Plan

- Assessment: Conduct a complete GDPR compliance review to detect security and governance vulnerabilities, evaluate processing risks for your data, and confirm compliance with applicable regulations.

- Development: Modify system infrastructure with strengthened encryption techniques, role-based access controls (RBAC), and compliance tools for securing information.

- Testing: Perform in-depth security tests, including penetration testing, system audits, and acceptance tests, to validate protective controls

- Deployment: Implement compliance in phased phases, with a minimum level of operational disruption and opportunity for iterative refinement and system optimizations.

- Monitoring: Establish continuous compliance tracking with AI-facilitated analysis, conduct regular audits, and apply real-time risk management methodologies to assure GDPR compliance and system integrity.

Conclusion

GDPR has initiated a new era in system development with its imposition of strong data protection and prioritization of anonymity for users. Organizations must operate in an environment with a complex scheme of regulation that will require them to integrate privacy-by-design methodologies, secure information processing, and real-time transactional observation for compliance. Compliance poses such challenges as implementation complexity, uncertainty in legislation, and performance compromises, but proactive techniques such as encryption, role-based access controls, and governance automation can harmonize system efficiency with compliance requirements effectively. Besides, companies have to review security and compliance methodologies in a continuous manner regularly in an ongoing search for countering new emerging threats and changing compliance requirements in a long-term basis.

As businesses increasingly depend on digital infrastructure, security for information has become a part of system planning. Organizations must integrate privacy as a principle in planning, such that security controls have no impact on operational efficiency. Trends in technology enriched with privacy, AI-powered compliance tracking, and blockchain-powered tracking of information can make GDPR compliance easier to manage. With these emerging trends, frameworks for safeguarding information can become efficient and allow companies to maintain agility and responsiveness at the same time. As awareness regarding information privacy keeps growing, companies prioritizing compliance can gain a competitive edge through trust and a demonstration of a desire to safeguard individual information. Overall proactive and meticulous compliance will not only allow companies to manage risks but will make them overall cybersecurity strong.

Recommendations

Actionable Steps

To ensure long-term GDPR compliance and future emerging legislation regarding data privacy, companies must act in anticipation in fortifying security frameworks:

- Regularly update security policies in accordance with developing laws and legislation, with a view to revising them every six months and including best practice in the field.

- Implement privacy-enhancing techniques such as homomorphic encryption and differential privacy in an effort to mitigate vulnerabilities in data exposure and maintain usability for operations and analysis.

- Conduct periodic audits, both internal and external, to detect vulnerabilities and evaluate system resilience.

- Employ automated compliance tools for real-time deviation in policies and taking immediate corrective actions.

Best Practices

- Adopt a security-first development at every stage of software and system development, with security controls incorporated at each stage

- Establish transparent data processing policies to inform users about how their information is collected, stored, and processed, in a manner that will instill trust in regulators

- Train employees in GDPR compliance and best practice, instilling a culture of awareness and accountability.

Reference

.png)

Reports

HI5033 Database Design Report Sample

Assignment Description

This assessment requires individual completion. Students are expected to complete a critique and conduct a literature review to discuss a contemporary issue relating to Database Systems and identify appropriate approaches to address this issue. The topic is “Evaluating the Impact of Indexing Techniques on Query Performance in Relational Databases”.

Each student is required to search the literature and find a minimum of ten (10) academic research papers (references) related to this topic. Subsequently, the student must critically analyse the selected references and provide an in-depth discussion on how they reflect the topic. This assessment is worth 40% of the unit’s grade and is a major assessment. Students are advised to begin working on this assessment as soon as possible.

Deliverable Description

You need to submit the final version of your assignment in Week 12. The structure of the final report should include a cover page and 5 sections as follows:

1. Introduction

State the purpose and objectives of the report, present an overview of the topic, and outline the scope of your analysis.

2. Literature Review

Summarize key findings from at least ten academic papers, highlighting relevant theories, methodologies, and results. Identify major trends, gaps, and areas of consensus or disagreement within the literature.

3. Discussion

Critically analyse the references reviewed in the literature review. Discuss how these sources contribute to the understanding of database optimisation, examining their relevance, strengths, and limitations.

4. Conclusion

Summarize your findings, emphasizing key insights from the literature review and discussion.

5. References

Provide a comprehensive list of all references cited in your report, formatted according to the Adapted Harvard Referencing style.

Solution

Introduction

The purpose of the report is to understand the perceptions in the existing literature related to the impact of the indexing technique and query performance in a relational. The main objective of this review is to understand the concept in detail and to know the implications of different aspects, techniques, models, and theories related to indexing and query that are associated with database performance and advancement. Indexing technique and query performance are a significant part of relation and database because they choose the effectiveness and feasibility of different databases for the Assignment Help in responding to challenges and advancement areas. In this respect, the nature of changes in indexing and query performance shows the evolution of databases. The scope of the analysis of literature will show different areas of query management as well as indexing techniques that are focused on the database application as well as developed by different researchers as well as real-time implementations. The scope is also to understand those areas that create advancement in the different databases.

Literature Review

Gadde (2021) opines that artificial intelligence helps reduce downtime and increase the accuracy of relational databases. The discussion shows an investigation of the understanding of leveraging artificial intelligence for improvement and enhancement of efficiency by minimising down time in relational database systems. It is seen that alignment of both traditional and modern proactive theoretical solutions of maintenance seems effective in providing the result. Historical data were reviewed on certain metrics, leading to the understanding that a random forest-based predictive model accuracy in maintenance and performance. It is there for the fact that the AI-driven predictive model as a trend enhances the database management system is gaining momentum.

Ali et al. (2022) argue that techniques like compact data structure and multi-query Optimisation are seen to be effective in enhancing data quality performance within the system. That discussion shows a review of techniques and systems that help contribute to the RDF query graph. The focus is on identifying the growth of RDF in terms of graph-based models that provide a theoretical understanding of challenges and KPIs. In terms of gaining efficiency graph partitioning in distributed systems shows more accuracy than local systems in scalability. The discussion is based on reviewing and examining the results of 135 RDF/SPARQL engines and 12 benchmarks. The discussion shows Limited knowledge about scalability in RDF and multi-query Optimisation as a concept.

Han et al. (2021) highlight that ML-based methods help to enhance the performance of the traditional query execution process. The discussion creates an understanding of the role of different estimation cardinality estimation methods to improve the functioning of the Quadri Optimisation in relational databases. The research focuses on understanding a model with respect to theoretical approaches of DBMS that helps in comparing traditional methods with modern machine learning-based approaches. The study shows different new aspects in the machine learning data driven method like P-error instead of E error that helps in improving the functionalities and feasibility of a system. The methods include the establishment of new benchmarks for real-world databases and the evaluation of multiple cardinality estimation methods. The finding shows that machine learning will create a modernised trend of shifting from traditional to modern query Optimisation models. There is inadequate information about the concept of the real world in this context.

Kossmann, Papenbrock, and Naumann (2022) view that dependence on data improves query optimisation. The discussion provides results of the survey in which different types of data-dependent processes are reviewed in query Optimisation in a relational database system. Rule-based and cost-based Optimisation approaches are considered for the review of the model in terms of theoretical understanding. Findings show that refinement of the execution plan, computation reduction and enhancement of indexing strategies showed improvement in enquiry Optimisation who data dependencies. A review of the survey is adopted as a methodology to gain insights. Trends indicate that there is increased research on the advanced metadata for query Optimisation. The low implementation rate of data-dependent processes in real practice limits knowledge and focus in the discussion.

Hosen et al. (2024) highlight that a new model or query language can enhance the abilities of the query. The discussion focuses on creating a new query language and a model for temporal graph data to understand its effectiveness in creating efficiency. Graph database principles are considered to identify the utilisation and features of the model. The finding shows that the model is capable of increasing the feasibility and efficiency of both real-world and synthetic data sets. The discussion indicates a significant growing interest in temporal data graphs and progressive query language. There is limited historical data about the temporal paragraph in the existing query model that can be compared through the discussion.

Pan, Wang, & Li (2024) argue that vector data management shows challenges in indexing and hybrid query execution. The discussion shows in-depth research on vector database management systems to outline its key challenges and future scope in research and techniques. Theoretical concepts like embedding-based retrieval, data management principles and similarities search are focused on and considered for gaining relevant insights. The finding highlights different categories and emerging techniques of vector database management systems including all its visible challenges. Models with unstructured data or large databases are growing rapidly facilitating the need for an advanced approach of vector database management in the coming time. Gaps in studies of hybrid query or Optimisation techniques are found within the discussion.

Wang et al. (2021) opine that Milvus is a vector-based data management system capable of managing high-dimensional data. Discussion highlights a specific with the database management model to show its competence and effectiveness in responding to high dimensional data of Artificial Intelligence and databased applications. Theoretical attributes and principles of system architecture have been focused on understanding the need for this model in data query management. The results indicate that Milvus is looking to outperform an existing database system by offering advanced functionalities. An extensive benchmark is set for evaluation of the specific vector database management system to compare it with multiple other database systems. Future trend shows increasing use of Artificial Intelligence and data science facilitates the need for a high performance vector database management system. Limitations of studies are seen in understanding hybrid data query processing.

Liu et al. (2021) view that error reduction and logical operation show effective query execution on knowledge graphs. The purpose of the discussion is to identify ways through which the execution in the knowledge graph can be enhanced. The mention of neutral embedding-based methods is done to identify gaps in the traditional approaches. The finding reveals that the proposed method is seen to enhance accuracy and efficiency in the query management of a knowledge graph. The methodology involves proposing a modern that can address embedding entities and queries in a vector space. The trend highlighted is a shift from rule based model to deep learning approaches. Limitation of discussion is seen in identifying the management of complex queries associated with various nodes.

Trummer et al. (2021) point out that the neural approach improves performances in various software analysis applications. The discussion sheds light on neural software analysis, in which approaches are exploded to enhance the efficiency and performance of systems. Finding show that neural approaches are effective in creating systematic functioning of analysis software Complex code patterns leading to enhancement of the existing process. The methods involve conducting training on the neural networks on large codebases. A major trend is seen in the adoption of machine learning in software engineering for code analysis. Gaps in the study are found in understanding the consequences of integrating modern neural analysis with traditional ones in terms of efficiency and flexibility.

Wang et al. (2022) opine that V-chain+ is an effective model that improves query performance more than existing systems. The study aims to explore the Boolean range of queries and their effectiveness in the blockchain database. Findings show that the proposed model in the research has significantly addressed mitigating gaps in the existing system and increasing efficiency enquiry performance within the database. A sliding window accumulator and an objective registration index are designed as part of the method to conduct this research. The trend shows that there is increasing integration of index techniques with the blockchain for data retrieval. Gaps of knowledge are seen in inefficiency and prayer solutions to the challenges used to Complex query management.

Khan et al. (2023) point out that a NoSQL database is better than SQL in managing large volumes of unstructured data. The discussion focuses on a comparative assessment of two types of data: NoSQL and SQL. The outcome shows that NoSQL by Scheme with less structure and horizontal scalability, responds effectively and better than SQL databases to manage Complex data. The methodology adopted for this purpose is a systematic review of literature With a definite number of sample data. The growing trend is seen in the adoption of NoSQL databases for big data applications and Systems. The study shows a gap in providing effective solutions to issues like interoperability and data portability.

Cao et al. (2021) state that a native cloud-based database is capable of quick responses to adverse situations or failures. The study aims to explore in native cloud-based database in terms of its application for aggravated data centres to improve efficiency, availability and performance. Resource disaggregation theory provides a strong theoretical framework to identify aspects related to the New database designing. The finding suggests that the specific database is helpful in failure recovery faster in practice. Methods like optimistic locking, index-aware prefetching, etc, are used for creating the design of the proposed model. Trends show growing interest in serverless and aggravated databases to respond to scalability and cost-effectiveness. An understanding of the scalability gap is found in studies within the traditional and existing databases.

Fotache et al. (2023) opine that data masking has minimal impact on query improvement. The discussion explored the role of performance penalty in dynamic data masking in SQL databases focusing on Oracle. Privacy by design model and security theories are considered to identify different principles and aspects related to data masking in SQL databases. The results show that dynamic determine asking has a weak influence on query management in an SQL database. Methods include query analysis, machine learning models, etc. The future growing interest in the alignment of security and compliance in databases holds a significant part in the discussion. The discussion provides less focus on the Cost performance of dynamic data masking in Real-world scenarios.

Panda, Dash & Jena, (2021) highlights that Particle Swarm Optimisation increases efficiency of the query execution. The purpose of the study is to optimise the query response time of blockchain by applying evolutionary algorithms. Blockchain indexing, cryptographic security theories helps to understand the framework more dominantly and significantly. Finding show that PSO reduce computational costs within systems. The adoption of artificial intelligence in the optimisation of blockchain databases is a major trend. The limitations of the study are seen in identifying the role of artificial intelligence in the centralised system in terms of query response management.

Wu & Cong (2021) state that deep autoregressive models provide better accuracy and improve cardinality estimation in databases. The discussion provides a new proposed model for improving estimation cardinality in a database. Gumbel Software trick and deep learning techniques provide a theoretical framework for understanding the requirement of the proposed database. The results show a reduction in error and an improvement in efficiency in workload shifts. The study is done using a hybrid training model. Trends in the discussion is seen in growing AI Adoption in cardinality estimation within a database. The gap is seen in identifying further Optimisation techniques.

Discussion

Considering the literature review, one of the major aspects that is considered as a strength focus on advancement related to query execution or management is the adoption of artificial intelligence. In most of the review of discussion artificial intelligence is found to be effective in handsome database system by reduction of error down time and and enhancement of scalability efficiency and performance. Artificial intelligence enhances the performance of a query in a database system proficiently (Panda et al., 2021). In terms of understanding future scope, it is also seen that the growing interest and adoption of artificial intelligence is the need for more advanced and data-driven models for queries and indexing. This growing interest also has led to different new model proposals, as seen in the existing literature. The second positive aspect that comes out of the literature review is the focus on creating models that reflect the alignment of both the traditional and modern forms, focusing on that theoretical foundation and creating a progressive approach for relational database query management. Deep learning-based models are seen to Breach the gap between data-driven and query-driven approaches. It simply means that all of the focus in proposing new models and techniques identifies strengths of the traditional approaches and modern technology in terms of mitigating the gaps and challenges. This shows are very potential nature of Advanced indexing techniques as well as query management in relational databases.

Major weakness or gaps seen in the existing model is limitations of knowledge related to advanced Optimisation or Technology implementation for making a traditional database efficient. Specific models are seen to be proposed for mitigating only specifications like blockchain. There is a Limited number of knowledge in identifying a specific solution that can address multiple databases and their challenges. In this respect, there is a need for more future research on areas right real-time analysis of computational cost neural network, etc, to understand the appearance of relational database query management in the coming time. Despite growing demand, the discussion shows certain limitations of AI in indexing and query management. There is less focus on real-time query Optimisation, which reflects the scalability of a database. It is to be noted that artificial intelligence means more explanation in terms of application to real-world scenarios. Database complexities are also growing with time and creating different unrecognisable patterns of data constructed data that create obstacles for the user to perform efficiently. In this respect, future research on AI applications in the area of query analysis and indexing needs to be focused more on real business case studies.

The sources used for the literature review collectively show that database Optimisation is a significant part of adjusting for future challenges and opportunities. Performance enhancement is the primary purpose for this Optimisation, for which integration with artificial intelligence hybrid or cloud-based approaches and security management needs to be focused and prioritised.

Conclusion

To conclude, it can be said that query Optimisation is one of the significant aspects that continuously changes with the advancement of Technology and the changing nature of the different database systems. So, most of the articles provide an understanding of square Optimisation and different models and techniques that are relevant to creating a positive impact on performance. It is also to be noted that the discussion shows an inclination to our artificial intelligence as one of the potential technology that addresses the need for database indexing and query management over a long time. That discussion highlights different types of proposed models and theoretical approaches that enhance the dimension of knowledge related to the current implications of relational databases as well as query performance and indexing approaches. This is significant in identifying the potential of the database development areas.

References

.png)

Assignment

CSE5CRM Cyber Security Risk Management Program Assignment Sample

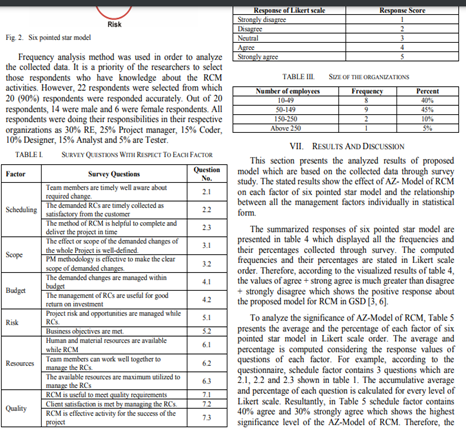

Assessment Components

Component 1: Asset Evaluation and Classification

Objective: Demonstrate the ability to identify and classify organizational assets for risk assessments.

Tasks:

1. Asset Inventory Creation:

Develop a comprehensive inventory of an organization's assets (hardware, software, data, human resources). Provide a detailed description of each asset.

2. Asset Categorization:

Categorize each asset into groups (e.g., critical, sensitive, non-critical).

Justify the categorization based on the asset's role and importance to the organization.

3. CIA Triad Evaluation:

Assess each asset based on confidentiality, integrity, and availability requirements.

Assign a classification level (e.g., high, medium, low) and justify the classification.

4. Business Impact Analysis:

Conduct a Business Impact Analysis (BIA) to determine the potential impact of asset compromise on business operations.

Classify assets according to their impact on business continuity.

5. Data Sensitivity and Value Assessment:

Evaluate the sensitivity and value of data handled by each asset.

Classify assets based on the data they manage and provide justification.

Deliverable: A report documenting the asset inventory, categorization, CIA triad evaluation, business impact analysis, and data sensitivity and value assessment.

Assessment Criteria:

- Completeness and accuracy of the asset inventory.

- Justification for asset categorization and classification.

- Depth of analysis in the BIA and data sensitivity assessment.

- Clarity and organization of the report.

Component 2: Developing Methods for Evaluating and Monitoring Cyber Risk Management

Objective: Develop and apply methods for evaluating and monitoring cyber risk management.

Tasks:

1. Qualitative Risk Assessment:

Utilize qualitative methods (e.g., expert judgment, scenario analysis) to evaluate cyber risks.

Document the process and findings.

2. Quantitative Risk Assessment:

Apply quantitative methods (e.g., Annual Loss Expectancy, Monte Carlo simulations) to quantify cyber risks.

Present the results and explain the methodology used.

3. Key Risk Indicators (KRIs):

Develop a set of KRIs to monitor the organization's risk landscape.

Explain how these KRIs will be measured and monitored.

4. Continuous Monitoring Plan:

Design a plan for continuous monitoring of cyber threats, including tools and techniques to be used (e.g., SIEM, IDS, EDR).

Explain how the monitoring plan will be implemented and maintained.

5. Periodic Risk Assessment Plan:

Develop a schedule and methodology for conducting regular risk assessments.

Outline the steps for updating the risk assessment based on new threats and vulnerabilities.

Deliverable: A comprehensive document detailing the qualitative and quantitative risk assessments, KRIs, continuous monitoring plan, and periodic risk assessment plan.

Assessment Criteria:

- Appropriateness and thoroughness of the risk assessment methods.

- Justification for the selection of KRIs.

- Feasibility and effectiveness of the continuous monitoring and periodic risk assessment plans.

- Clarity and professionalism of the document.

Component 3: Determining Cost-effective Treatments to Manage Cyber Risk

Objective: Identify and justify cost-effective treatments to manage cyber risk.

Tasks:

1. Risk Treatment Options Analysis:

Identify and analyze risk treatment options (e.g., risk avoidance, risk reduction, risk transfer, risk acceptance).

Provide examples and justifications for each option.

2. Prioritization of Security Controls:

Prioritize security controls based on their effectiveness and cost.

Use the results from the risk assessments to guide prioritization.

3. Cost-benefit Analysis:

Conduct a cost-benefit analysis for each proposed security control.

Include calculations for Return on Security Investment (ROSI) and Total Cost of Ownership (TCO).

4. Implementation Plan:

Develop a plan for implementing selected security controls.

Outline the steps, resources required, and timeline for implementation.

5. Cost-effective Security Metrics:

Propose metrics to measure the cost-effectiveness of implemented security controls.

Explain how these metrics will be monitored and reported.

Solution

Component 1: Asset Evaluation and Classification

1.1 Asset Inventory Creation

.png)

.png)

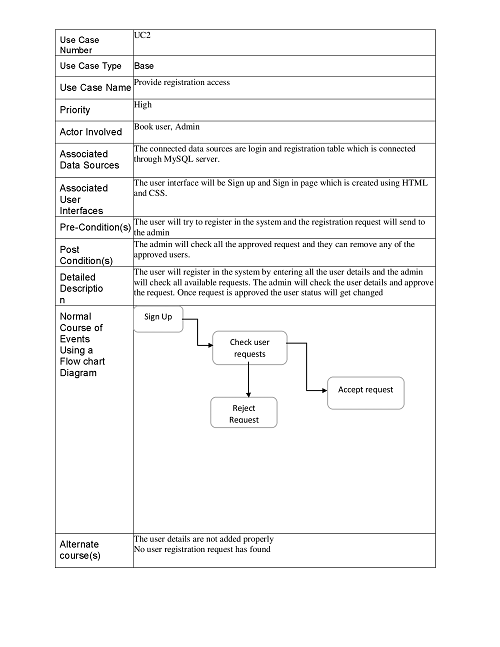

Table 1: Assets inventory

(Source: Self-created)

1.2 Asset Categorization

Critical

- Web Server

- Firewall

- ERP System

Non-critical

- Workstations

Sensitive

- CRM System

- Customer Database

1.3 CIA Triad Evaluation

.png)

Table 2: CIA Triad Evaluation

(Source: Self-created)

Justification

The risk classification levels, therefore, depend on the significance of the asset to the operations, security, and data of the organization. The firewall, IT administrator, and customer database are highly classified because their loss or compromise impacts confidentiality, integrity, and availability severely by breaching customer data, shutting down operations, and attracting penalties. Enterprise resource planning and customer relationship management, as are the systems under discussion, are considered high in importance (Fraser et al. 2021).

1.4 Business Impact Analysis

Business impact analysis (BIA) is the assessment for the Assignment Help of the effect that adverse events will have on an organization’s operations in the event of the compromise of assets. In the case of the web server, firewall, CRM system, and ERP system, if compromised, it is very devastating to business continuity. For example, the web server responsible for hosting customer applications means the absence of this resource will result in a lack of access to services, loss of trust, and consequently low revenues. It safeguards the whole network and the failure of a firewall means that the organization is vulnerable to cyber-attacks and all the other systems would be at risk (Ghadge et al. 2020).

.png)

Figure 1: Risk Assessment and Business Impact Analysis

(Source: Linkedin.com, 2024)

Also, highly sensitive assets such as the customer database and financial records will cause severe legal and financial penalties as a result of data privacy laws such as GDPR. The opportunity loss would mean the inability to make sales, customer inconvenience, and delay in responding to their needs and demand but the harm is not severe as it will entail loss in potential revenue. Despite the definite usefulness of data analysts, their non-attendance does not necessarily lead to a stop of activities (Crumpler, and Lewis, 2022).

Classification

.png)

Table 3: Assets classification

(Source: Self-created)

1.5 Data Sensitivity and Value Assessment

.png)

Table 4: Data Sensitivity and Value Assessment

(Source: Self-created)

Component 2: Developing Methods for Evaluating and Monitoring Cyber Risk Management

2.1 Qualitative Risk Assessment

The qualitative risk assessment process of the anticipated scenario opened quite valuable information for the organization’s cybersecurity risks. The analysis of possible phishing attacks showed one important conclusion, though such attacks are progressing in real-world scenarios. For instance, the recent attack, the Twitter hack of the year 2020, where the attackers used phishing techniques to gain control over the executives’ accounts, indicates the risks involving negligence when it comes to the aspect of employee training on how to identify the threats (Li, and Liu, 2021). The investigation of insider threats provided more understanding of problems related to frustrated personnel becoming criminals and exploiting their privileges. Prominent examples like the Edward Snowden case of 2016 show that insider threats can have severe consequences for organizational reputation and customer loyalty.

2.2 Quantitative Risk Assessment

Annual Loss Expectancy (ALE)

The Annual Loss Expectancy (ALE) assessment enables the measurement of loss from identified threats in the assets. First, one specifies the assets and the threats that they face, for example, loss of data, or ransomware attacks. Next, every asset is given a dollar amount attached to it. There are three main types of threats, and the threat frequency, which is expressed as the Annual Rate of Occurrence (ARO), is calculated using statistical data (Kamiya et al. 2021).

“SLE=Asset Value * Exposure Factor”

“ALE= SLE * ARO”

Findings

.png)

Table 5: Findings from quantitative analysis

(Source: Self-created)

2.3 Key Risk Indicators

Key Risk Indicators (KRIs)

- Number of Security Incidents: This KRI measures the overall cumulative count of security incidents which can be within a month or within a quarter. An increasing rate of occurrence may also point to weaknesses that may require prompt action to be taken.

- Phishing Attack Rate: This represents the number of employees who have failed the phishing simulation tests. A higher rate indicates the need for enhancing the security training and awareness programs within their organizations (Zwilling et al. 2022).

- User Access Violations: This one measures the number of attempts made by unauthorized individuals to access restricted data or information systems. In user group wise use of physical access controls, an increase in violations could be from potential insiders or lack of access control (Sarker et al. 2021).

Measurement and Monitoring of KRIs

- Data Collection: Implement organizational structures for acquiring key data for each KRI. For instance, the Security Information and Event Management (SIEM) tool logs security incidents and access attempts.

- Regular Reporting: Develop KPIs and KRIs and locate or build the respective trending charts into the systems, to provide capabilities to track changes over time. In cases of monthly or quarterly reporting, there will be improved recognition of new trends and risks (Gupta et al. 2023).

- Continuous Review: Ensure that KRIs are dynamic while conducting routine reviews on such factors in relation to changes in the risk environment and business direction. This ensures that the indicators are still meaningful and in tune with the objectives that the organization seeks to put forward (Khinvasara et al. 2023).

2.4 Continuous Monitoring Plan

.png)

Table 6: Continuous Monitoring Plan

(Source: Self-created)

2.5 Periodic Risk Assessment Plan

.png)

.png)

Table 7: Continuous Monitoring Plan

(Source: Self-created)

The process of the newly updated risk assessment has included several steps that still need to be followed to ensure cybersecurity is still adequate. First, the organizations have to gather threat intelligence by either purchasing, or getting a feed that alerts them to threats, the second part is that they have to read and be aware of different industry reports and trends.

Component 3: Determining Cost-effective Treatments to Manage Cyber Risk

3.1 Risk Treatment Options Analysis

Risk Avoidance

Risk avoidance gives direction in the disposal of do in order to erase each activity that has the possibility to put the organization at risk. For example, if relatives are aware of vulnerabilities of a certain software application that cannot be fixed to a reasonable extent, they might decide not to use it in the organization.

.png)

Figure 2: Risk treatment options

(Source: Researchgate.net, 2024)

Risk Reduction

Risk minimization is centered on achieving the lowest probabilities of occurrence of risks and their severity. For instance, an organization may employ more and sophisticated firewalls and intrusion detection systems, to strengthen a network security standard (Zeadally et al. 2020). Training common employees on cybersecurity can also reduce the instances of errors which are common causes of cyber threats.

Risk Transfer

Risk transfer means the transferring of responsibility of a risk to a third party usually through contracts and or insurance. For example, an organization may invest in cyber liability insurance for protection from losses that may occur due to hacking. The said approach enables the organization to cap its resources liability while at the same time making sure that resources for dealing with such are available.

3.2 Prioritization of Security Controls

Risk control is helpful for organizations because it assists in efforts to prioritize security controls and allocate resources in a way that will be most effective in diminishing risk. Considering security controls, it is also possible to describe a risk-based approach that takes into account not only the effectiveness of the particular control but also the costs for its implementation.

.png)

Figure 3: How to implement the types of security controls

(Source: sprinto.com, 2024)

Also, the cost of controls should also be taken into consideration by organizations. It is not reasonable to use high-cost controls at workplaces when low-cost can offer almost the same level of protection.

3.3 Cost-Benefit Analysis

Firewall

- Initial Cost: $20,000

- Annual Maintenance Cost: $3,000

- Lifespan: 5 years

Total Cost (TCO) = 20,000 + (3,000*5)

=35,000

Risk Reduced (Annual Savings) = $100,000

ROSI = 10000/3500 * 100

= 285.71%

Intrusion Detection System

- Initial Cost: $15,000

- Annual Maintenance Cost: $2,500

- Lifespan: 5 years

- Risk Reduced (Annual Savings): $75,000

Total Cost (TCO) = 15000 + 2500*5

= 27500

ROSI = 75000 / 27500 * 100

= 272.73%

Employee Training

- Initial Cost: $5,000

- Annual Maintenance Cost: $1,000

- Lifespan: 3 years

- Risk Reduced (Annual Savings): $25,000

Total Cost (TCO) = 5000 + 3*1000

= 8000

ROSI = 25000/8000 *100

= 312.5%

Based on the cost-benefit analysis, the three proposed security controls have high ROSI, and the most beneficial security control is the employee training program.

3.4 Implementation Plan

.png)

.png)

Table 8: Implementation plan

(Source: Self-created)

3.5 Cost-effective Security Metrics

Proposed Metrics to Measure

Thus, the effectiveness of the security controls implemented in an organization can be represented by a set of metrics that are listed below, which can give a proper understanding of the organization’s security. One of these is Return on Security Investment (ROSI) which measures security investment dollar returns against the dollars invested.

Monitoring and Reporting of Metrics

In order to make these metrics more effective, the organization should incorporate a proper monitoring and reporting technique in order to ensure that security controls are evaluated consistently. This serves as a basis for making the correct evaluations concerning the prevailing security situation (Khando et al. 2021).

References

Reports

MCRIT010 Innovation and Commercialisation in IT Report 3 Sample

This is a case study of a well-known international brand. Students will apply the knowledge and skills on Innovation and Commercialisation in IT, from the weekly topics to the four questions based on the case study provided.

Word Length: 2,000 words (+/- 10%)

There are four questions. Each question is of equal value that is 7 1/2% each. Therefore, the word length for each question should be approximately 500 words each, to ensure the depth of answer to each question.

Formatting: Use Arial or Calibri 12 point font. Single or 1 1⁄2 spacing. The structure of the case study report is a title page, the questions as headings and answer the questions, then a reference list page.

Questions

Question 1) Disruptive Innovation

The case study refers to After Pay as a Disruptive Innovation in the finance industry.

(a) Do you agree with the analysis, why or why not?

(b) In your answer provide a definition and description of Christiansen’s Disruptive Innovation.

(c) How might traditional banks have used Disruptive Innovation to innovate their own fintech products?

Question 2) Timing of Entry

The case identifies Pay’s first mover status in the Australian buy now pay later (BNPL) market.

(a) How was After Pay different to previous buy now pay later products in Australia, to be called a first mover?

(b) What are the advantages for After Pay to be a first mover, what are the disadvantages?

(c) Is After Pay able to maintain its competitive advantage from fast followers or do fast followers have an advantage over After Pay?

Question 3) Innovation Strategic Direction

(a) Evaluate After Pay’s strategic direction in the BNPL later fintech industry using Porter’s Five Forces.

(b) Apply the Blue Ocean Strategy Eliminate Reduce Raise Create (ERRC) grid to After Pay, to evaluate their Blue Ocean Strategy. Use this ERRC grid to draw the Strategic Canvas for After Pay with the competitors as the traditional credit card companies.

Question 4) Future of After Pay

(a) This case study is the situation for After Pay in 2020. Provide an update of After Pay’s since 2020, referring to how they performed during and after COVID-19 lockdowns in Australia.

(b) What has been the impact of recent regulation changes to the BNPL industry on After Pay?

(c) How have they continued to innovate since 2020?

Solution

Question 1 Disruptive Innovation

A) AfterPay growing as disruptive Innovation in the Finance Industry

- The case study has referred to after-pay as a disruptive innovation in the finance industry, being one of the fastest-growing innovations commercially in today's business. Definitely, the disruptive innovation in the finance industry has transformative growth in recent times also analysed in case study review informatively. Pay Touch Group has integrated new digital platforms into the Fintech Sphere allowing consumers to experience fast services (Kamuangu, 2024). The new consumer centric approach used in business has shaped constant growth of new millennial shopping preferences.

- AfterPay as a disruptive innovation in the finance industry, claims to solve key problems with highly disruptive innovation for businesses growth domains.

Disruptive innovation has constantly risen in recent times due to highly flexible demands, leading wider vision productively on constant business work parameters. AfterPay has a huge competitive profits scope with usage and implementation of disruptive innovation growth and inclusion of sustained change parameters.

B) Christensen's theory of disruptive innovation

- Christenson’s theory for The Assignment Helpline of disruptive innovation signifies process by which products or services take root in creative applications in market. The theory determines bringing less expensive and more accessible in terms of technology brings new moves upmarket displacing established competitors' pace. It signifies relentless growth with stages: disruption of incumbent, dynamic and new rapid linear evolution, appealing convergence, and complete imagination within systems rise functionally (Christensen, 2015).

- Disruptive technology in the finance industry has shaped constant growth goals technically and enlarged varied goals for businesses today. Also, the theory states untapped new constant changes with the usage of disruptive technology growth rise, wider strengths through online systems, and disruptive innovation expansion (Christensen, 2015). The BPNL segment has risen 8% within e-commerce payment, by around a three-fold increase, and the international market expecting a rise by around 22% over five years to approx. $33 billion.

C) Traditional banks have used Disruptive Innovation to innovate their own Fintech services.

- Traditional banks have used Disruptive innovation to work within their own Fintech products, as the Fintech boom is a global phenomenon with 40% of investments rising in recent times. Fintech hubs globally including Australia's new markets have emerged specifically, bringing sustained constant growth among commercial usage. The BPNL Fintech segment within Australia expanded in 2020, with high-scale innovation and new demand-oriented investments by companies specifically (Ahmed et al., 2024).

- AfterPay has become a global Fintech giant, with a clear market leader in a rising competitive segment in recent times diversely. Customers can make purchases and pay with four equal payments, which has increased rapid demand for technology-oriented systems. AfterPay slogan “There’s nothing to pay" has dragged in a varied rise in new consumers today, stating the significance of the drastic strength rise in flexible technology systems.

Question 2 Timing of entry

A) AfterPay reason for first mover advantage

- AfterPay offers many unique features in its services from other player sin fintech business within Australia, focusing on sustainable growth market criteria and delivering best competent services. It has expanded around 50% of Australian market, requiring higher customers’ ability to have their purchase now and pay later option. It also focuses on segment, that customers without high traditional interest credit are able to make new purchases and can further have four equal payment systems (Güleç et al., 2024).

- The business model of AfterPay has flipped economies of credit cards innovatively by leading constant change, incorporating new systems of credit cards with high consumer oriented strengths. Also, through this simple product offering, AfterPay is attractive to consumers as it offers one repayment reschedule and also flat fee structure on missed payment dates.

B) Advantages and disadvantages of AfterPay first mover advantage

- The advantages of AfterPay to be the first mover are many, as company has focused on providing best user experience for people who like to spend more. It can be also highlighted that consumers with more purchasing power have been major target market, with inclusion of free credit and retailers should pay more in returns to their larger transactions.

- Advantages of AfterPay are that it is a multi-sided platform business connects to larger range of consumers, millennial using credit cards within growing market scenarios. Millennials in Australia are the most competitively growing strong market, that are rising with new purchasing power for receiving goods (Wang et al., 2024). The company boasts of new 86 million consumers and a network of 200000 merchants, rising commercial goodwill.

- However, some disadvantages are there such as AfterPay 20% of revenue comes from its late fees, signifying the company earns more from reprocessing which increases dependability. Pay shares faced a decline of 4.7% major per cent in share value after the governance firm, which has brought enlarged change dynamically. There is an error in technology, which has led to a rise in concerns regarding changing access to new pay later debts criteria dynamically.

C) AfterPay maintaining competitive advantage

- AfterPay is unable to maintain a competitive advantage from fast followers, as there is a build-up of headwinds for the company. There has been a recent striking deal with us retail giants in 2021, leading to a resurgence of share prices and varied change substantially. The company is making substantial losses, with the average revenue ratio hovering around 50 and, the technical valuation of the share price being overpriced (Ferraro et al., 2024).

- Also, AfterPay has rumours of regulation, dampening its future prospects. There are also rising global competitors entering the market all the time, thus increasing external risks for business. Investors have been impatient with lowered returns from company revenue levels, thus being highly risk for future business goals. The company has intensely disrupted the accuracy and validity of data, sidestepping credit obligations and wider loopholes raising financial stress and potentially causing losses.

Question 3 Innovative Strategic Direction

A) Porter Five Forces model

Analysis of in BNPL Later Fintech industry forces impacting AfterPay using the Porter Five Forces model will focus on identifying the company's further new business goals competitively. The major factors of the five models are as follows:

- Competitive rivalry within the industry: The BNPL market has been found to be valued at USD 30.38 billion in 2023 analysis, the market is projected to grow to rise of 37.38 in 2024 and a CAGR of 20.7% during the recent period. There is a high threat for AfterPay Company, as new fintech companies are raising revenue within BPNL for expanding best standards in consumer spending habits (W. Van Alstyne, 2016).

- Threat of new entrants: There is also a high threat of new entrants for the company, as Klama Swedish BNPL Company backed by Alibaba provides high competition within the lucrative European market. The Karma solutions company has been rising among consumers, merchants with new lucrative growing opportunities within market systematically. The entry of PayPal in 2020 has also been a threat to rapid expansion in the US within three months, raising business challenges for AfterPay.

- Threat of substitutes: BPNL players are rising today with new creative business models and higher interest towards faster consumer services exponentially. Consumers have new substitutes, thus there is medium threat of this factor for AfterPay business.

- Threat of suppliers’ power: There is less threat of suppliers, as automation and fast supply chain management sustainable factors are focused by company (Vynogradova et al., 2024).

- Threat of consumers’ power: Medium threats to this factor, as consumers have a preference for AfterPay services due to highly innovative and dynamic services.

Also consumers today further have many new choices too, which increases competition for businesses to largely focus on wider dimensions.

Therefore, a review has been informative regarding changing BNPL market domains competitively growing in market scenarios. It has also integrated new channels extensively, ideally focused on core mechanisms stating constant innovation with fintech.

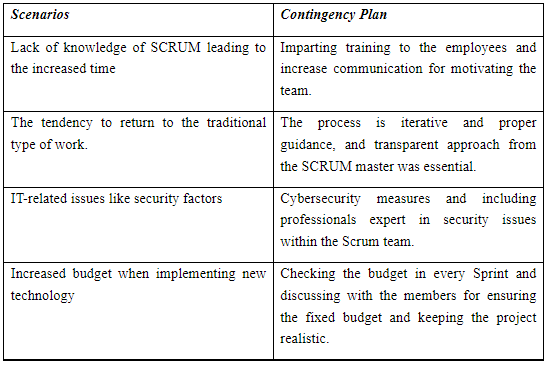

B) ERRC model of blue ocean strategy

The ERRC model of Blue Ocean's strategy will focus on reducing AfterPay and will evaluate company performance with competitors as traditional credit card companies. Eliminate- reduce-raise-create grid is an essential innovative matrix tool driving companies to focus on eliminating errors and creating new blue ocean development.

.png)

.png)

Figure 1: ERRC graph, 2024

The ERRC graph has focused on identifying major dimensions on new growing and fluctuating changes rising in business of After Play, it has presented major values on larger values towards diverse changing market perceptions. ERRC graphical representation has also established focus on range, complexity, and changing demands.

- Interest rates and fees will be eliminated by company

- Traditional credit checks in reduce section, as it will curb down old technical flaws and loopholes in system

- Merchant benefits and customer convenience in raise section, are major parameters that will be raised and developed

- Instalment payment in create section, important for After pay to install new changes

There has to be a focus developed on eliminating unnecessary complexity in payment schedules, and standardized payment systems at After Play. The new online consumer engagement, digital quality promotions, and new digital quality features have to rise with higher levels of investments (Kumari et al., 2023).

Question 4 Future of After-pay

A) AfterPay conditions since 2020, performance during and after the COVID-19 lockdown in Australia

- After play platform business is highly competitive advantage turning credit traditional into new debit business, changing regulation of banks aspects variedly.

The company has charged commissions from retailers worth 6% of transaction value, charging drops of risk and price of customers providing new superior value levels functionally.

- The company has become Fintech Giant worth over $30 billion within market capitalization in 2020, signifying major new demands rising in business levels (Hughes, 2018). The After-pay condition post-COVID economic downturn can be analysed with the threat of economic downturn, being a major threat to the company's booming success levels. The company has faced high competition from various new entrants in business-changing market scenarios; consumers are highly trusting AfterPay commercial services.

- There is also attention on fact that merchants will be benefiting in recession, with excess inventory and risen consumers retailers engagement in BNPL system commercially (Powell et al., 2023). However, some major players like PayPal and Alibaba Kalma are some major stiff competitors of After Play which are rising in demand in the BPNL industry.

B) Analysis of recent regulation changes impacting business of AfterPay

- AfterPay has turned traditional credit card system into new debit appraisal service, escaping new regulations at banks that to be further abided by. Instead of receiving interest from consumers, the brand has focused on specifically leading change into charging commission from retailers of around 6% within new transaction values (Financial Review. 2018)

- The recent regulation changes in the BPNL industry on AfterPay have led to change drops in risk and price for consumers focused on providing new superior value propositions successively on wider standards. After play slogan “There’s nothing to pay” led rise to hundreds and thousands of millennial, consumers in Australia purchasing goods with high flexibility.

C) Innovation since 2020

- The company's striking new commercial deal with one of the most prominent US retail giant in the middle of year 202, that has grown to be wider resurge on share price value within market scenarios.

- AfterPay like most competitors aims to reduce losses, the average price/ revenue ratio hovering around new 50 forms within rising technical changes within rising share price. Playing a multi-sided platform business, within a new marketplace enhances attention on aversion to credit cards and grows in an ecosystem (CHOICE, 2018).

- After the Play model has been flipping economies of credit cards, a business model is highly creative technically for incorporating new submission levels. This simple product offering is highly attractive, as repayment rescheduling is based on a flat fee structure for missed payments.

References

Reports

BUS5DWR Data Wrangling and R Report 2 Sample

Assignment Details

Objective:

The main goal of this assignment is to perform a comprehensive analysis of the Dubai Real Estate Transactions of first six months in 2023 using R. Through careful data wrangling and analytical techniques, you will extract meaningful insights about the Dubai Real Estate market.

Your analysis will focus on:

- Identify trends in the types and volumes of real estate transactions.

- Highlight price trends across different property types and neighborhoods.

- Assess market activity over time, analyzing transaction fluctuations.

- Provide strategic recommendations based on your findings that can help guide real estate investment and development decisions.

Part 1: Data Wrangling & Exploration

1.1 As a data analyst, write a brief description (1 paragraph) on your initial approach to extracting meaningful insights from the Dubai Real Estate Transaction dataset. What challenges might arise during the early stages of analysis?

1.3 Identify columns with missing values. Instead of removing rows, explain two methods you would use to handle missing data related to the columns you identified and apply one method to fill in missing values for one selected column and show the dataframe.

1.4 In real-world datasets, inconsistencies in data are common and need to be addressed. The room column appears to have some inconsistencies. Analyze the data and fix the inconsistencies.

1.5 You are interested in counting the number of sales matches the below criteria:

1.6 Identify potential outliers in the amount column. Choose and apply an appropriate method for dealing with these outliers. Explain why you chose this method. (2 marks)

1.7 Create two new columns that extract the day, month from the transaction date

1.8 Define a function in R that takes the property size in square meters (property size sqm) as input and categorizes properties into three categories:

1.9 Group the data by property type and calculate the average property size sqm for each property type. Provide a brief insight based on the results. (3 marks)

1.10 Calculate the average propety sale price categorized by property subtype and present the results in descending order. What insights can be derived from this analysis?

Part 2: Market Trend Analysis & Strategic Insights

2.1 Use a histogram to analyze the distribution of the amount (property sale price) .

(a) Use a histogram to visualize the distribution of property sale amounts. Ensure the bins are appropriately adjusted to highlight key patterns in the data.

(b) Based on the histogram, provide insights into the distribution of property sale amounts. Are there any noticeable patterns or trends?

2.2 Using appropriate visualization (i.e bar graph), analyze transaction count based on room types.

(a) Create an appropriate visualization to view the transaction count based on room types (e.g., 1 B/R, 2 B/R, studio). Ensure the graph clearly shows the transaction count for different room types.

(b) Analyze the visualization and discuss the insights based on visualisation. 2.3 Using appropriate visualisation/s, analyze transaction count for the top 10 areas.

2.3 You are interested in analyzing whether the nearest landmark has a significant impact on the amount (property sale price) and the subtype of properties (i.e Flat, villas) sold in these areas.

(a) Using appropriate visualisation/s, compare property sale prices based on different nearest landmarks. Ensure the visualization clearly displays any patterns or differences.

(b) Is the dominant property subtype consistent across all landmarks, or does it vary depending on the proximity to different landmarks? (Displaying the data of dominant property subtype is sufficient)

2.4 You are interested in analyzing the monthly sales of top 10 property subtypes (e.g., flats, villas, townhouses) to discover insights. Using the new month column created from transaction_date, visualize the total number of property sales per month, based on top 10 property subtypes.

(a) Using appropriate analytic and visualization techniques analyse monthly sales grouped by property subtypes.

(b) Based on the visualizations, discuss any noticeable trends or insights you can extract.

Solution

Introduction

The Dubai real estate market, which has grown and developed swiftly and includes various transactions, calls for insights based on existing research. This case study is based on a transactional analysis of data gathered on the property market within the first half of 2023. Knowledge of these mechanisms is essential for making correct investment choices and forecasting a perspective evolution of quotes.

Objectives

- To assess patterns of transactions and define trends in the Dubai real estate market.